Designing a truly autonomous vehicle will require harnessing generative AI to enhance the capabilities of predictive AI.

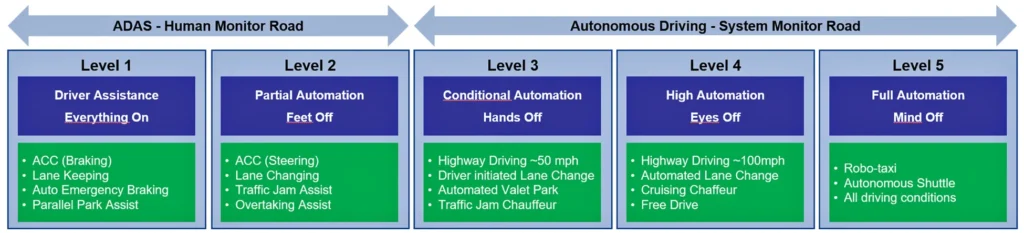

The journey toward fully autonomous driving (AD) is progressing through five levels of increasing automation, codified by the Society of Automotive Engineers (SAE) in 2014 in its J3016 standard, which also defines a Level 0 for no automation.

The progression begins at Level 1 (L1), basic driver assistance, and moves through Level 2, where drivers can keep their feet off the pedals. Level 3 allows hands-off-the-wheel operation, and at Level 4, drivers can keep their eyes off the road. Level 5 represents full autonomy, allowing all occupants to turn their attention away from driving entirely; at L5, vehicles may not even have steering wheels. Figure 1 walks through the five SAE levels.

By the latter half of the 2010s, there was widespread anticipation that early Level 4 prototypes would arrive by 2022. It is now clear that those projections were overly ambitious. The first robotaxis operating with basic L4 functionality are only now beginning to transport passengers autonomously within the San Francisco metropolitan area.

The early optimism was underpinned by an overestimation of AI capabilities, particularly those of what is now termed “predictive AI.” This approach focused heavily on predicting and reacting to driving conditions based on vast amounts of data. There is now a growing realization that true autonomous driving will require augmenting predictive AI with generative AI (GenAI) algorithms, the approach that underpins today’s mainstream artificial neural networks.

Predictive AI versus generative AI: comparison and potential

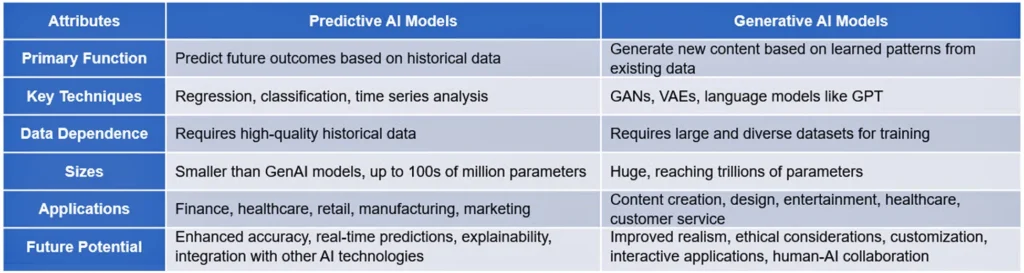

To understand the shift in approach, it is worth examining the capabilities of predictive versus generative AI.

Predictive AI algorithms analyze vast amounts of collected data to identify patterns and trends, making informed predictions through machine learning. Predictive AI encompasses a wide range of algorithms, each suited to specific types of data and forecasting challenges. Deep-learning algorithms such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) excel at handling complex, high-dimensional data, while ensemble methods such as random forests, extreme gradient boosting (XGBoost) and Light Gradient Boosting Machine (LightGBM) provide accurate predictions across various domains. Bayesian methods offer probabilistic reasoning, and time-series models such as the autoregressive integrated moving average (ARIMA) and Prophet are tailored for sequential data. Reinforcement learning algorithms lead in scenarios that require real-time decision-making and adaptation.
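To make “learn from historical data, then predict what comes next” concrete, here is a minimal sketch of the simplest time-series predictor: a first-order autoregressive AR(1) model. It is only the “AR” part of ARIMA, with no differencing or moving-average terms, fitted by ordinary least squares in plain Python. The function names and the lead-vehicle speed series are hypothetical illustrations.

```python
from typing import List, Tuple


def fit_ar1(series: List[float]) -> Tuple[float, float]:
    """Fit x[t] = c + phi * x[t-1] to a series by ordinary least squares."""
    if len(series) < 3:
        raise ValueError("need at least three observations")
    xs = series[:-1]  # predictors: each value's predecessor
    ys = series[1:]   # targets: the value that followed
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("predictor values are constant; phi is undefined")
    phi = cov / var
    c = mean_y - phi * mean_x
    return c, phi


def forecast(series: List[float], c: float, phi: float, steps: int) -> List[float]:
    """Roll the fitted model forward, feeding each prediction back in."""
    preds = []
    last = series[-1]
    for _ in range(steps):
        last = c + phi * last
        preds.append(last)
    return preds


# Hypothetical lead-vehicle speeds (m/s) sampled once per second.
speeds = [10.0, 12.0, 14.0, 16.0, 18.0]
c, phi = fit_ar1(speeds)
print(forecast(speeds, c, phi, steps=2))  # [20.0, 22.0]
```

Practical predictive pipelines swap in far richer models (ARIMA, gradient-boosted trees, CNNs or RNNs), but the fit-then-forecast pattern is the same.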

GenAI creates new content, such as text, images, music and videos, by learning from existing data. Its most prominent algorithmic models are generative adversarial networks (GANs), variational autoencoders (VAEs) and transformers. Recent transformer-based models such as OpenAI’s GPT, Google’s Bidirectional Encoder Representations from Transformers (BERT) and Meta’s Large Language Model Meta AI (LLaMA) have significantly increased the potential of neural networks, representing a big step forward in the evolution of AI.
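The operation at the heart of transformers is scaled dot-product attention, softmax(QKᵀ/√d_k)V: each query is scored against every key, the scores are normalized into weights, and the output is the weighted mix of the values. The pure-Python sketch below shows a single head only, without the learned projections, masking or batching that real models add; the tiny matrices are made up for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention over lists of row vectors."""
    d_k = len(k[0])
    out = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights = softmax(scores)
        # Output row = attention-weighted sum of the value rows.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


# Two identical keys get equal weight, so the output is the mean of the values.
print(attention(q=[[1.0, 0.0]], k=[[1.0, 1.0], [1.0, 1.0]], v=[[0.0, 2.0], [4.0, 6.0]]))
# [[2.0, 4.0]]
```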

Model size comparison

Measured in number of parameters, both predictive and generative AI models have increased dramatically in size, with some of the largest models in each category reaching hundreds of billions to even trillions of parameters.

Predictive AI models are typically designed for tasks such as classification, regression and prediction based on input data. Most range in size from millions to tens of billions of parameters, but some are far larger: Google’s Pathways Language Model (PaLM), one of the largest, has about 540 billion parameters. Although PaLM is often used for generative tasks, it can also perform predictive tasks, illustrating the overlap between predictive and generative AI.

GenAI models are often an order of magnitude larger than predictive AI models. OpenAI’s GPT-4 is among the largest, with an estimated size exceeding 1 trillion parameters. (The exact parameter counts of the most advanced GPT-4 versions have not been publicly confirmed.)
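Parameter counts translate directly into memory that must be stored and, at inference time, streamed into the processor. As a back-of-the-envelope sketch, weight storage is simply parameters times bytes per parameter. It counts weights only (no activations, caches or optimizer state), and the 1-trillion figure for GPT-4 is the unconfirmed estimate quoted above, used here as an assumption.

```python
def weight_bytes(num_params: float, bytes_per_param: float) -> float:
    """Storage for model weights alone, in bytes."""
    return num_params * bytes_per_param


MODELS = {"PaLM (~540B params)": 540e9, "GPT-4 (assumed 1T params)": 1e12}
FORMATS = {"FP16": 2, "INT8": 1}  # bytes per parameter

for model, params in MODELS.items():
    for fmt, nbytes in FORMATS.items():
        tb = weight_bytes(params, nbytes) / 1e12
        print(f"{model}, {fmt}: {tb:.2f} TB")
```

Even with 8-bit weights, these footprints run to hundreds of gigabytes or more, so the weights must live in off-chip memory and be moved to the compute units, which is where the costs described in the next section come from.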

The boundary between predictive and generative AI is blurring as models like GPT-4 prove their utility for both predictive tasks (e.g., answering questions) and generative tasks (e.g., writing essays). The trend is toward creating versatile models that can perform a wide range of tasks, leading to enormous parameter counts.

Impact of AI model sizes on processing hardware

Research has shown that moving data can consume up to three orders of magnitude more energy than processing it. The disparity is most pronounced when data is transferred to and from off-chip DRAM.
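The scale of that disparity can be illustrated with a few lines of arithmetic. The per-operation energies below are assumptions: order-of-magnitude values in the range commonly quoted for an older (45 nm-class) process, not measurements of any particular chip. The sketch compares one multiply-accumulate (MAC) whose two operands come from a small on-chip SRAM with one whose operands come from DRAM.

```python
# Assumed, illustrative per-operation energies in picojoules for 32-bit operands.
ENERGY_PJ = {
    "fp32_add": 0.9,
    "fp32_mul": 3.7,
    "sram_read_32b": 5.0,    # small on-chip SRAM
    "dram_read_32b": 640.0,  # off-chip DRAM
}


def mac_energy_pj(operand_source: str) -> float:
    """Energy of one multiply-accumulate whose two operands are read from operand_source."""
    compute = ENERGY_PJ["fp32_mul"] + ENERGY_PJ["fp32_add"]
    movement = 2 * ENERGY_PJ[operand_source]
    return compute + movement


for source in ("sram_read_32b", "dram_read_32b"):
    total = mac_energy_pj(source)
    compute_share = (ENERGY_PJ["fp32_mul"] + ENERGY_PJ["fp32_add"]) / total
    print(f"{source}: {total:.1f} pJ per MAC, {compute_share:.1%} spent on arithmetic")
```

With these assumed numbers, a MAC fed from DRAM spends well under 1% of its energy on the arithmetic itself; the rest goes to moving the operands.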

This fundamental challenge has significant implications for the efficiency of compute hardware tasked with executing AI models. As models grow in size and complexity, with GenAI models the prime example, more data must be moved for every result: energy consumption rises substantially, latency lengthens and efficiency declines noticeably. This decline shows up as a gap between the hardware’s theoretical peak processing power and its actual performance under real-world workloads. The energy and latency penalties of data movement therefore become a critical bottleneck, especially in large-scale AI applications, where minimizing such inefficiencies is essential to achieving high system performance at reasonable energy consumption and cost.
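One common way to reason about that gap is the roofline model: attainable throughput is the lesser of the chip’s peak compute rate and its memory bandwidth multiplied by the workload’s arithmetic intensity (FLOPs performed per byte moved). The accelerator figures below are hypothetical, and the intensity of roughly 1 FLOP per byte for batch-1 token generation assumes FP16 weights that are each read once and used in a single multiply-accumulate.

```python
def attainable_flops(peak_flops: float, mem_bw: float, intensity: float) -> float:
    """Roofline model: throughput is capped by compute or by memory bandwidth.

    peak_flops: peak compute rate (FLOP/s); mem_bw: memory bandwidth (bytes/s);
    intensity: arithmetic intensity of the workload (FLOPs per byte moved).
    """
    return min(peak_flops, mem_bw * intensity)


PEAK = 100e12  # hypothetical accelerator: 100 TFLOP/s peak
BW = 1e12      # hypothetical off-chip bandwidth: 1 TB/s

WORKLOADS = {
    # Batch-1 token generation, FP16 weights: 2 FLOPs (multiply + add) per 2-byte weight.
    "decode, batch 1": 2 / 2,
    # Large matrix multiply that reuses cached operands heavily (assumed value).
    "large GEMM": 200.0,
}

for name, intensity in WORKLOADS.items():
    perf = attainable_flops(PEAK, BW, intensity)
    print(f"{name}: {perf / 1e12:.0f} TFLOP/s ({perf / PEAK:.0%} of peak)")
```

The ridge point, PEAK / BW = 100 FLOPs per byte, marks where a workload stops being memory-bound. The decode case sits two orders of magnitude below it and reaches only about 1% of peak, which is exactly the theory-versus-practice gap described above.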

Table 1 encapsulates the main differences between predictive and generative AI models.

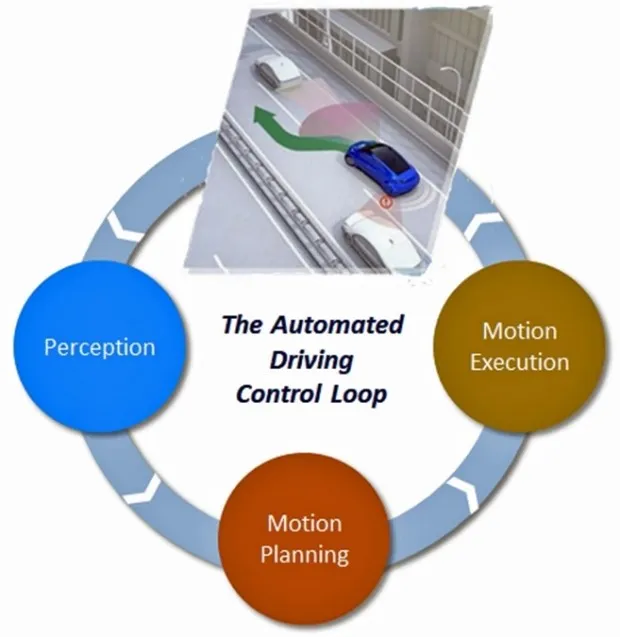

Mastering the automated control loop

The electronics industry envisioned building AD vehicles around the automated driving control loop, an architecture comprising three subsystems, or stages: perception, motion planning and motion execution (Figure 2).

In the perception stage, an AD vehicle interprets its surroundings by gathering raw data from an extensive array of sensors and processing it with sophisticated algorithms. The motion planning stage converts this processed sensor data into decisions that guide the vehicle’s controller in computing the optimal trajectory. Finally, in the motion execution stage, the vehicle autonomously follows the planned trajectory, executing precise movements in real time.
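A toy, one-dimensional version of this three-stage loop shows how the stages hand off to one another. Everything here is a simplifying assumption for illustration: perception is a noisy gap measurement to a lead vehicle, planning is a proportional rule that picks a target speed (assuming the lead vehicle’s speed is known exactly), and execution tracks that speed within acceleration limits. Real AD stacks replace each function with far richer models.

```python
import random
from dataclasses import dataclass


@dataclass
class Vehicle:
    position: float  # m
    speed: float     # m/s


def perceive(true_gap: float, rng: random.Random, noise_std: float = 0.5) -> float:
    """Perception: a noisy range measurement of the gap to the lead vehicle."""
    return true_gap + rng.gauss(0.0, noise_std)


def plan(measured_gap: float, lead_speed: float, desired_gap: float = 30.0,
         gain: float = 0.5, max_speed: float = 40.0) -> float:
    """Motion planning: match the lead's speed, corrected by the gap error."""
    target = lead_speed + gain * (measured_gap - desired_gap)
    return min(max(target, 0.0), max_speed)


def execute(ego: Vehicle, target_speed: float, dt: float,
            kp: float = 1.0, max_accel: float = 3.0) -> None:
    """Motion execution: accelerate toward the target speed within limits."""
    accel = min(max(kp * (target_speed - ego.speed), -max_accel), max_accel)
    ego.speed += accel * dt
    ego.position += ego.speed * dt


def run(steps: int = 200, dt: float = 0.1, seed: int = 0) -> tuple:
    rng = random.Random(seed)
    ego = Vehicle(position=0.0, speed=20.0)
    lead = Vehicle(position=50.0, speed=25.0)  # lead cruises at constant speed
    for _ in range(steps):
        gap = perceive(lead.position - ego.position, rng)  # 1. perception
        target = plan(gap, lead.speed)                     # 2. motion planning
        execute(ego, target, dt)                           # 3. motion execution
        lead.position += lead.speed * dt
    return lead.position - ego.position, ego.speed


final_gap, final_speed = run()
print(f"gap {final_gap:.1f} m, speed {final_speed:.1f} m/s")
```

After 20 simulated seconds the ego vehicle settles near the desired 30 m gap at the lead’s speed; the measurement noise shows up only as small jitter because the planner and controller together act as a low-pass filter.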

AI’s evolving role in control loop implementation

In early implementations of AD control loops, the perception stage relied on the most advanced predictive AI algorithms and models available at the time, such as hidden Markov models (HMMs), Kalman filters, Bayesian networks and Gaussian processes. These models showed promise by processing data from various sensors, including LiDAR, radar and cameras, to anticipate the movements of surrounding vehicles, pedestrians and cyclists, thereby aiding in formulating safe vehicle trajectories.
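To give a flavor of these classical techniques, the sketch below is a textbook one-dimensional constant-velocity Kalman filter that tracks, for example, a pedestrian’s position along a crosswalk from noisy position readings and then extrapolates it forward. The motion model, noise variances, function names and sample readings are assumptions chosen for illustration; production trackers use multi-dimensional states and sensor-specific models.

```python
from typing import List, Tuple

State = List[float]      # [position, velocity]
Cov = List[List[float]]  # 2x2 covariance


def kalman_cv(zs: List[float], dt: float, meas_var: float,
              accel_var: float) -> Tuple[State, Cov]:
    """Filter position measurements zs with a constant-velocity model."""
    x: State = [zs[0], 0.0]                   # start at first reading, unknown speed
    P: Cov = [[meas_var, 0.0], [0.0, 100.0]]  # large initial velocity uncertainty
    for z in zs[1:]:
        # Predict: x <- F x with F = [[1, dt], [0, 1]].
        x = [x[0] + dt * x[1], x[1]]
        # P <- F P F^T + Q, with Q from a white-noise acceleration model.
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + accel_var * dt**4 / 4
        p01 = P[0][1] + dt * P[1][1] + accel_var * dt**3 / 2
        p10 = P[1][0] + dt * P[1][1] + accel_var * dt**3 / 2
        p11 = P[1][1] + accel_var * dt**2
        # Update with a position-only measurement, H = [1, 0].
        s = p00 + meas_var         # innovation variance
        k0, k1 = p00 / s, p10 / s  # Kalman gain
        y = z - x[0]               # innovation
        x = [x[0] + k0 * y, x[1] + k1 * y]
        P = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
    return x, P


def predict_ahead(x: State, horizon: float) -> float:
    """Extrapolate position assuming the estimated velocity stays constant."""
    return x[0] + horizon * x[1]


# Hypothetical readings (m) at 0.5 s intervals for a pedestrian walking ~1.4 m/s.
readings = [0.0, 0.75, 1.35, 2.1, 2.85, 3.45, 4.2, 4.95, 5.55, 6.3]
x, P = kalman_cv(readings, dt=0.5, meas_var=0.04, accel_var=0.1)
print(f"speed ~{x[1]:.2f} m/s, position in 2 s ~{predict_ahead(x, 2.0):.1f} m")
```

The filter blends each prediction with each new measurement in proportion to their uncertainties, which is what let these early systems anticipate where a road user would be a moment later.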

Ultimately, however, several limitations hindered these models’ widespread adoption in commercial AD applications. The predictive models excelled at forecasting potential scenarios based on historical and current data, but they fell short in dynamic and unpredictable environments that demanded rapid adjustments, such as adapting to sudden and unforeseen changes in traffic conditions in real time. The processing hardware constraints of the time exacerbated the problem, slowing response times and reducing overall system reliability. Challenges in handling sensor noise, occlusions and varying weather conditions also contributed to the predictive models’ inadequacy for effective and reliable AD performance.

These limitations underscored the need for more advanced and efficient AI models capable of real-time processing and dynamic adaptation. That requirement has been increasingly addressed by GenAI algorithms and models, which have shown remarkable potential for enhancing AD systems.

For instance, GenAI can simulate highly realistic driving scenarios, allowing self-driving cars to be trained and tested in virtual environments. This capability is particularly valuable for preparing autonomous vehicles to handle rare or unexpected situations that are difficult to encounter in real-world testing. Generative models can also support route optimization by analyzing real-time traffic data and generating efficient routes that account for factors such as congestion, weather conditions and even sudden road closures.
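Practical scenario generators rely on far richer models such as GANs, VAEs or transformers, but the basic generative loop (fit a distribution to logged data, sample new scenarios from it, keep the rare ones) can be sketched with the simplest possible generative model: an independent Gaussian per scenario parameter. The parameter names and logged values are hypothetical, and the independence assumption ignores the correlations that real generators capture.

```python
import random
import statistics
from typing import Dict, List, Tuple

Scenario = Dict[str, float]
Model = Dict[str, Tuple[float, float]]  # parameter -> (mean, std dev)


def fit(logged: List[Scenario]) -> Model:
    """Estimate a mean and standard deviation for each scenario parameter."""
    model: Model = {}
    for key in logged[0]:
        values = [s[key] for s in logged]
        model[key] = (statistics.mean(values), statistics.stdev(values))
    return model


def sample(model: Model, n: int, rng: random.Random) -> List[Scenario]:
    """Draw n synthetic scenarios, each parameter sampled independently."""
    return [{k: rng.gauss(mu, sd) for k, (mu, sd) in model.items()} for _ in range(n)]


def tail_below(scenarios: List[Scenario], model: Model, key: str,
               z: float = 2.0) -> List[Scenario]:
    """Keep scenarios whose `key` is more than z standard deviations below the mean."""
    mu, sd = model[key]
    return [s for s in scenarios if s[key] < mu - z * sd]


# Hypothetical logged pedestrian-crossing scenarios.
logged = [
    {"ped_speed_mps": 1.2, "crossing_gap_m": 18.0},
    {"ped_speed_mps": 1.4, "crossing_gap_m": 25.0},
    {"ped_speed_mps": 1.3, "crossing_gap_m": 21.0},
    {"ped_speed_mps": 1.5, "crossing_gap_m": 30.0},
    {"ped_speed_mps": 1.1, "crossing_gap_m": 16.0},
]
model = fit(logged)
synthetic = sample(model, n=10_000, rng=random.Random(42))
edge_cases = tail_below(synthetic, model, key="crossing_gap_m")
print(f"{len(edge_cases)} of {len(synthetic)} synthetic scenarios have unusually short gaps")
```

For a Gaussian, only about 2% of samples fall more than two standard deviations below the mean, so generating many synthetic scenarios is a cheap way to collect short-gap cases that a handful of logged drives would rarely contain.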

In the event of a sensor malfunction, GenAI can generate synthetic sensor inputs to ensure continuous and reliable operation of the vehicle. Additionally, it can analyze driving patterns to detect anomalies, whether they stem from the driver, the vehicle or the road conditions, and take corrective actions in real time to maintain safety.
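The sketch below shows the shape of these two safeguards using deliberately simple statistical stand-ins rather than generative models: a missing reading is replaced by linearly extrapolating the previous two, and a reading is flagged as anomalous when it lies more than a chosen number of standard deviations from a short trailing window. The function names, window size, threshold and sample readings are hypothetical.

```python
import statistics
from typing import List, Optional


def fill_missing(readings: List[Optional[float]]) -> List[float]:
    """Replace None (sensor dropout) with a synthetic value from recent history."""
    filled: List[float] = []
    for r in readings:
        if r is not None:
            filled.append(r)
        elif len(filled) >= 2:
            filled.append(2 * filled[-1] - filled[-2])  # linear extrapolation
        elif filled:
            filled.append(filled[-1])                   # hold last value
        else:
            raise ValueError("cannot fill a gap before any valid reading")
    return filled


def flag_anomalies(readings: List[float], window: int = 5,
                   z_thresh: float = 3.0) -> List[bool]:
    """Flag readings far from the mean of the preceding `window` readings."""
    flags = []
    for i, r in enumerate(readings):
        hist = readings[max(0, i - window):i]
        if len(hist) < 2:
            flags.append(False)  # not enough history to judge
            continue
        mu, sd = statistics.mean(hist), statistics.stdev(hist)
        flags.append(r != mu if sd == 0 else abs(r - mu) / sd > z_thresh)
    return flags


print(fill_missing([1.0, 2.0, None, 4.0]))
print(flag_anomalies([10.0, 10.2, 9.9, 10.1, 10.0, 25.0]))
```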

One of the most promising GenAI applications, as exemplified by London-based Wayve, is reducing the reliance on labeled datasets through self-supervised learning techniques. The approach allows AI models to learn and adapt without the need for extensive manual labeling, thereby accelerating the development of robust autonomous systems.
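Self-supervised learning takes its training targets from the data itself. One common pretext task is next-step prediction: slide a window over an unlabeled sequence (of camera frames, say) and train the model to predict the item that follows each window. This is shown as a generic illustration, not as Wayve’s actual method. The hypothetical helper below only builds the (context, target) pairs, and the frame identifiers are placeholders.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def next_step_pairs(sequence: Sequence[T], context: int) -> List[Tuple[List[T], T]]:
    """Turn an unlabeled sequence into (context window, next item) training pairs."""
    if context < 1:
        raise ValueError("context must be at least 1")
    return [(list(sequence[i - context:i]), sequence[i])
            for i in range(context, len(sequence))]


# Placeholder frame IDs; in practice these would be images or sensor sweeps.
frames = ["f0", "f1", "f2", "f3", "f4"]
for ctx, target in next_step_pairs(frames, context=3):
    print(ctx, "->", target)
```

No human labeling is involved: every target is simply a later item from the same recording, which is what lets this kind of training scale with raw driving data.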

Furthermore, GenAI offers the potential to move beyond today’s dependency on the high-definition maps used to navigate and localize vehicles. By integrating environmental inputs with the perception and motion planning stages, generative models enable the vehicle to adapt to its surroundings in real time, even in the absence of preexisting maps. This constant assessment of potential risks, together with the ability to adjust the vehicle’s behavior accordingly, represents a leap forward in the pursuit of fully autonomous, safe and efficient driving.

When predictive AI and generative AI are combined, they can greatly enhance the overall system’s performance, adaptability and safety, enabling a more dynamic and reliable AD experience.

Secret sauce: the algorithms driving AD development

Unsurprisingly, developers of leading-edge AD algorithms and models are strategically guarding their innovations, keeping their most advanced techniques and breakthroughs tightly under wraps. This secrecy is crucial in a highly competitive field where even a slight edge can make a meaningful difference. By closely protecting their intellectual property, companies aim to prevent competitors from learning from, replicating or even improving on the cutting-edge technologies they have painstakingly developed.

However, it’s important to recognize that the science behind AD algorithms is far from mature. In fact, the industry is in the early stages of what is likely to be a long and dynamic journey of discovery and refinement. This rapid evolution means that the AD landscape is constantly shifting with new models and approaches being introduced at a staggering rate. The race is on to develop algorithms that are not only more efficient and reliable but also capable of adapting to the ever-changing conditions of real-world driving.

The bottom line is clear: If autonomous driving is to succeed commercially, it must integrate perception with planning and leverage generative AI to enhance the capabilities of predictive AI.