Editor’s Note: Jean-Marie Brunet, Vice President and General Manager of hardware-assisted verification at Siemens, recently addressed the rapid growth of a specialized area in the chip design and verification space called hardware-assisted verification.

In a conversation with Lauro Rizzatti, Brunet offers a unique perspective on verification, a result of his product management, operation, engineering and marketing responsibilities. He also considers the rapid growth of hardware-assisted verification, market segment-based requirements and how verification tool providers stay ahead of the market. The discussion concludes with a look at how hardware-assisted verification and validation will evolve over the next few years.

Rizzatti: There’s been a lot said lately about the rapid growth of hardware-assisted verification tools. What do you attribute this growth to?

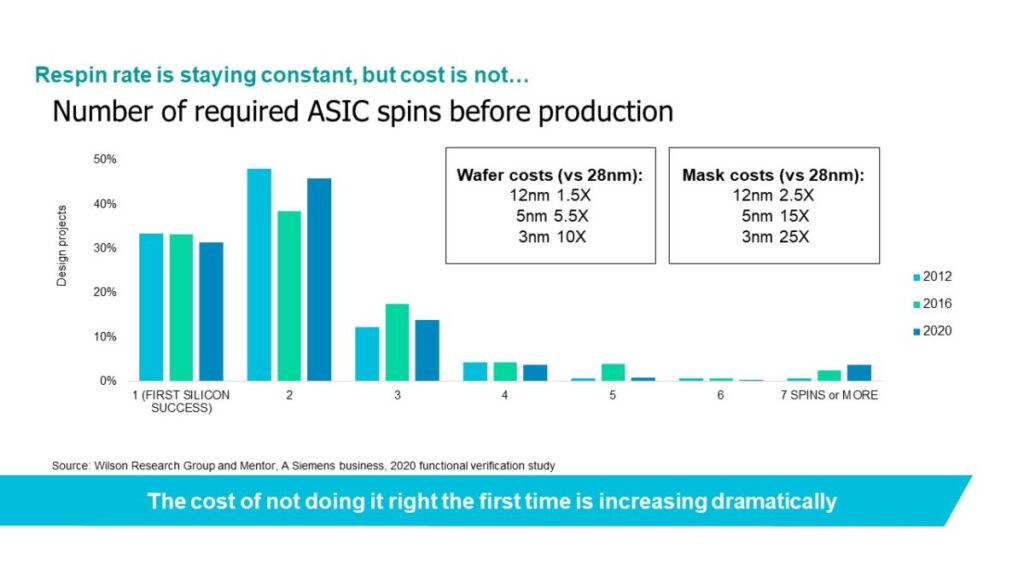

Brunet: The basic reason is that it has become much more expensive to design and develop new chips. The verification and validation cost is scaling with the complexity of the new devices. The cost of not doing it right the first time is increasing dramatically. The justification to invest in hardware-assisted verification is there, which is why this market segment is growing significantly in revenue.

Figure #1 caption: While the number of required ASIC respins before production has stayed constant, the cost of a respin is escalating. Source: Siemens

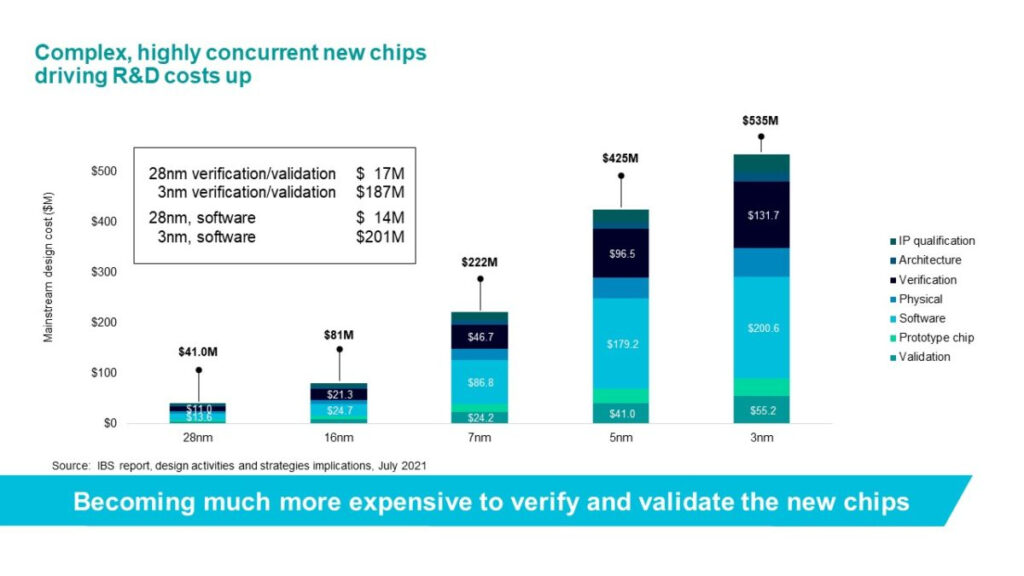

First-time silicon success is exceedingly important. Of course, the costs per technology generation of IP verification and of architecture and verification have also grown. At 28nm, verification and validation cost $17 million and software $14 million. At 3nm, they cost $187 million and $201 million, respectively.

Figure #2 caption: The cost of verification and validation has grown more expensive with each new process technology. Source: Siemens
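As a rough illustration of the scale involved, the growth factors implied by the figures Brunet quotes can be worked out directly. The short Python sketch below uses only the four dollar amounts from the interview; the variable and function names are illustrative, and the "combined" figure is simply the sum of the two quoted categories, not a number given by Siemens.

```python
# Costs quoted in the interview, in millions of US dollars.
# The dictionary layout and names are illustrative assumptions.
costs = {
    "28nm": {"verification_validation": 17, "software": 14},
    "3nm": {"verification_validation": 187, "software": 201},
}


def growth_factor(category: str) -> float:
    """Return how many times larger the 3nm cost is than the 28nm cost."""
    return costs["3nm"][category] / costs["28nm"][category]


vv_growth = growth_factor("verification_validation")
sw_growth = growth_factor("software")

# Sum of the two quoted categories only; other cost lines are not included.
combined_growth = sum(costs["3nm"].values()) / sum(costs["28nm"].values())

print(f"Verification and validation: {vv_growth:.1f}x")
print(f"Software: {sw_growth:.1f}x")
print(f"Combined: {combined_growth:.1f}x")
```

On these numbers, verification and validation cost grows roughly elevenfold between 28nm and 3nm, and software cost grows somewhat faster.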

Rizzatti: Are market segments such as HPC, automotive and storage moving at the same pace with the same general verification requirements or are there differences?

Brunet: These three growing markets have distinct verification challenges and different requirements for verification and validation methodology and the supporting tools, although shift-left is a methodology common to all three.

High-performance computing (HPC) is all about capacity and follows the traditional semiconductor curve for processors and microprocessors. It includes CPUs, GPUs and all the new AI/ML techniques. The key design and verification requirement is capacity scalability. These chips usually start small, then grow quickly into large 10- or 15-billion-gate devices. It’s a binary system where either the design can be verified or it cannot. Emulation’s capacity limits are the trigger. Because of this, an HPC verification group needs a methodology that relies less on emulated logic and more on virtual models for whatever does not belong or fit in the emulator.

The automotive market is different and going full throttle to pack as much technology as possible into chips. The key verification challenge is the ability to verify the device within the context of the car’s system. That takes system-level modeling, abstraction and basic modeling, and the ability to integrate different types of stimulus and different types of sensors and actuators. Most could be virtual and able to send stimulus to a chip that then reacts accurately within the system requirements.

Storage follows a different trend. Verification is moving toward virtualization here. It used to be in-circuit emulation (ICE) environments. Now most leaders in this space have moved to virtualization. They no longer have an entire ICE environment. It’s hybrid with some components that still require ICE because they are proprietary and confidential. Certain memory device components have an ICE load, but the surrounding components have been moved to virtualization. Virtualization is enabling pre-silicon characterization of important key performance indicators related to storage.

Rizzatti: How does a company like Siemens support all three markets with three different sets of requirements?

Brunet: It requires communication and discussions early on with key customers to help define their needs years ahead. From the needs that we gather, we select certain vertical markets that are poised for growth. We purposely do not target some markets with our hardware-assisted verification architecture because the requirements don’t match our core competencies.

The three described above (HPC, which is about capacity; automotive, which is about system-level modeling and the ability to change model fidelity at any point in time; and storage, which is moving to virtualization) are absolute sweet spots for the Veloce platform. Many other vertical segments have a great appetite for hardware-assisted verification, and we are also developing unique solutions for them.

Rizzatti: In the hardware-assisted verification space, technology providers must be several years ahead of chip design to be ready to meet new requirements. In general, how difficult is that planning scenario?

Brunet: It’s easier for us to plan as part of Siemens where we use a rigorous five-year planning process and focus on long-term investment management. At any point, we need to have a fully funded product strategy five years ahead.

CapEx investments are important to plan correctly and that’s what we do. Every year, we revisit our five-year fully funded program by group or by division. This allows us to put a long-term strategy in place, which has helped tremendously because of our hardware portfolio. We design the chip ourselves and therefore have a long planning and execution cycle. Executing on the device requires knowing which process node will serve the program best and when capacity on that process node will be available. We follow process technology scaling and advanced nodes very closely to select the node that serves us best.

That ability to plan based on process technology availability for ramp up of production is an exercise we have been doing for many years.

We also purchase FPGA devices for our prototyping offering. Here, it’s more of a discussion with suppliers to understand their roadmap and plan our roadmap around what is doable given their process technology and availability.

Another category is software where we develop a detailed understanding of what matters to customers today and within a couple of years. We do that by looking at trends and where we make our investment in headcount to be sure that the software we develop for the hardware is addressing specific needs.

Rizzatti: How do you see verification and, more specifically, hardware-assisted verification evolving over the next few years?

Brunet: It’s easy for me to answer this because I see two parallel and related threads. One is the hardware, and the other is the software. On the hardware level, there is an appetite to have hardware-assisted verification solutions or products addressing three spaces.

One is a real emulator for compilation and debug of large chips. The second is emulation offload, which defines a second generation of hardware that is congruent with the emulator and serves a well-defined purpose. When the design is at full RTL, it’s less about debug and more about performance.

This is where a second hardware system is needed. A recent announcement claims all verification and validation can be done with one hardware system. I don’t agree. Verification engineers cannot do everything with one hardware system. One FPGA-based hardware environment can target performance and take a similar model from the emulator to an enterprise prototyping type of solution. It’s a simple high-level business value proposition: improve the verification cycle and reduce the cost of verification by running more cycles on a cost-effective machine.

The third category is traditional prototyping, related to the end-customer use model with peripherals running close to at-speed and software development. It doesn’t have to be full chip. The design can be small or large, but this is a different type of requirement.

In terms of the software, we see a business need for software workload acceleration. The days of taking functional vectors usually used for simulation to do a workload or mimic what’s happening on silicon and power are over. Customers want to run early with real live software workloads.

Those are the two domains that could be perceived as distinct or parallel. The strategy moving forward is the ability to run software workloads on different types of hardware without changing too much of the environment. It’s inefficient to change everything. It is more efficient to execute within the same platform and move back and forth, from the IP level early on to the subsystem and all the way to full RTL and the final system.