Training and inference are two crucial aspects of AI processing in datacenters. Learn the differences between the two and the cost-efficiency issues involved.

The execution of artificial intelligence (AI) workloads in datacenters (Figure 1) involves two crucial processes: training and inference. At first glance, these processes appear similar—both involve reading data, processing it, and generating outputs. A closer examination reveals significant differences between them.

Training vs. Inference in Datacenters: Key Differences

The exploding complexity of AI models, exemplified by large language models (LLMs) with hundreds of billions or even trillions of parameters, has been driving unprecedented computational demands for both training and inference. Yet their operational requirements and priorities diverge significantly.

Computational Performance

Training is an exceptionally computationally intensive process, demanding ExaFLOPS of compute to analyze and extract patterns from vast, often unstructured datasets. This process can span weeks or even months, as models undergo iterative refinement to achieve high accuracy.

Inference, while also computationally demanding, operates on a smaller scale, typically measured in PetaFLOPS. Its focus is narrower: it applies trained models to specific tasks, such as responding to user queries, which makes it more targeted and streamlined.

Response Time

For training, accuracy takes precedence over speed. The process involves long runtimes with models running continuously to fine-tune outputs and reduce the likelihood of hallucinations.

Inference, on the other hand, prioritizes speed. It must deliver results almost instantaneously to meet user expectations, with response times typically ranging from milliseconds to a few seconds.

Latency

Latency is a secondary concern during training as the emphasis is on achieving precise and reliable outcomes rather than immediate results.

Conversely, inference depends on low latency to maintain smooth user experiences. High-latency responses can disrupt interactions, making latency a crucial metric for performance.

Precision

Training requires high precision, typically using formats such as fp32 or fp64, to ensure models are reliable and to minimize errors. This high level of precision demands significant processing power and continuous operation.

Inference balances accuracy with efficiency by employing lower precision formats like fp8 for many applications.

These formats significantly reduce computational demands without compromising the quality needed for effective results.
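To make the precision trade-off concrete, the sketch below estimates how much memory a model's weights occupy in different numeric formats. The 100-billion parameter count is an illustrative assumption (the text only says "hundreds of billions"), and the names `BYTES_PER_PARAM` and `weight_footprint_gb` are hypothetical. The byte widths follow directly from the bit widths in the format names.

```python
# Bytes needed to store one parameter in each numeric format
# (bit width divided by 8).
BYTES_PER_PARAM = {"fp64": 8, "fp32": 4, "fp16": 2, "fp8": 1}


def weight_footprint_gb(num_params: int, fmt: str) -> float:
    """Return the memory needed for the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    params = 100_000_000_000  # assumed model size, for illustration only
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt}: {weight_footprint_gb(params, fmt):,.0f} GB")
```

Under these assumptions, moving from fp32 to fp8 cuts the weight footprint by a factor of four, which also means fewer bytes to move for every inference pass.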

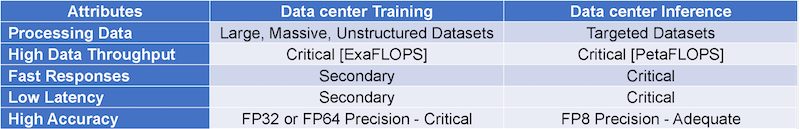

Table 1 illustrates these distinctions across five key attributes, emphasizing how training and inference optimize performance, precision, and efficiency to address the unique requirements of AI workloads.

Infrastructure Challenges: Power and Cost

The enormous computational demands of data centers necessitate rows of specialized hardware housed in robust, heavy-duty cabinets designed to accommodate large, high-performance systems. These setups consume energy on a massive scale, often measured in gigawatts, generating significant heat and requiring extensive cooling systems along with regular, specialized maintenance to ensure optimal operation.

Data centers tailored for AI processing come with exceptionally high costs. These expenses stem from multiple factors: the acquisition of cutting-edge hardware, the substantial investment in facility construction, the regular maintenance performed by skilled personnel, and the relentless energy consumption required to operate 24/7 throughout the year.

In training, the focus remains on producing accurate models, often sidelining cost considerations. The prevailing mindset is to “get the job done, whatever the cost.”

Inference, by contrast, is highly cost sensitive. The cost per query becomes a vital metric, particularly for applications managing millions or even billions of queries daily. A 2022 analysis by McKinsey illustrates the constraints for high-throughput AI systems. For instance, Google Search, handling approximately 100,000 queries per second, targets a cost of around $0.002 per query to remain economically viable. In comparison, ChatGPT-3’s cost per query, though not directly comparable because general-purpose and specialized use cases differ, was estimated at roughly $0.03, highlighting the gap in efficiency that must be closed to reach Google-level query economics.
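The scale of that gap is easier to see as daily spend. The sketch below uses only the figures quoted above; applying the LLM cost per query at the same 100,000 queries per second is an assumption made purely for comparison, and `daily_cost` is a hypothetical helper. `Decimal` is used so the currency arithmetic stays exact.

```python
from decimal import Decimal

SECONDS_PER_DAY = 24 * 60 * 60


def daily_cost(queries_per_second: int, cost_per_query: Decimal) -> Decimal:
    """Total daily spend for a service running at a steady query rate."""
    return queries_per_second * SECONDS_PER_DAY * cost_per_query


if __name__ == "__main__":
    qps = 100_000                   # query rate quoted in the text
    search_cost = Decimal("0.002")  # target cost per query quoted in the text
    llm_cost = Decimal("0.03")      # estimated LLM cost per query quoted in the text

    print("queries per day:", qps * SECONDS_PER_DAY)
    print("daily cost at search economics:", daily_cost(qps, search_cost))
    print("daily cost at LLM economics:", daily_cost(qps, llm_cost))
    print("cost ratio:", llm_cost / search_cost)
```

At the quoted figures the per-query costs differ by a factor of 15, so at a fixed query rate the daily bill scales by the same factor.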

Power efficiency presents a critical balancing act. While inference generally consumes less power than training, improving energy efficiency in inference can significantly lower costs and reduce environmental impact. Enhancements in this area allow data centers to either deliver greater computational power within existing energy constraints or decrease the cost per compute unit by reducing cooling and infrastructure requirements.

This landscape underscores an urgent need for innovative solutions that transcend the traditional tradeoff between computational efficiency and cost. By addressing these challenges, the next generation of AI advancements in data centers can achieve breakthroughs in performance, scalability, and sustainability.

State of AI Accelerators for Training and Inference

Current data center AI accelerators, predominantly powered by graphics processing units (GPUs), are employed for both training and inference. While a single GPU device can deliver performance on the scale of PetaFLOPS, its design architecture—originally optimized for graphics acceleration—struggles to meet the stringent requirements of latency, power consumption, and cost efficiency necessary for inference.

The interchangeable use of GPUs for both training and inference lies at the heart of the problem. Despite their computational power, GPUs fail to achieve the cost-per-query benchmarks needed for economically scalable AI solutions.

Limitations Rooted in Physics and Technology

GPUs boost data processing performance but do not enhance data movement throughput. This gap between compute and data movement stems from fundamental physical and technological constraints:

- Energy Dissipation in Conductors: When electrical power flows through conductors, energy dissipation is inevitable. Longer conductors result in greater energy losses, compounding inefficiencies.

- Memory versus Logic Power Dissipation: A corollary of the point above is that the energy dissipated by memory operations can reach up to 1,000 times the energy consumed by the logic used for processing data. This disparity has been concisely described as the memory wall, and it highlights the need for innovations in memory and data access strategies to optimize power efficiency.
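The second point can be illustrated with a rough energy budget. The sketch below assumes the worst-case ratio from the text (one memory access costing 1,000 times one logic operation), works in arbitrary energy units, and introduces a hypothetical parameter, `ops_per_access`, for how many logic operations are performed per memory access. It is a simplified model, not a measurement.

```python
MEMORY_TO_LOGIC_ENERGY_RATIO = 1_000  # upper bound quoted in the text


def memory_energy_share(ops_per_access: float,
                        ratio: float = MEMORY_TO_LOGIC_ENERGY_RATIO) -> float:
    """Fraction of total energy spent on memory accesses.

    One logic operation costs 1 unit; one memory access costs `ratio` units.
    `ops_per_access` is the number of logic operations per memory access.
    """
    logic_energy = ops_per_access * 1.0
    memory_energy = ratio
    return memory_energy / (memory_energy + logic_energy)


if __name__ == "__main__":
    for ops in (1, 10, 100, 1_000):
        print(f"{ops:>5} ops per access -> "
              f"{memory_energy_share(ops):.1%} of energy spent on memory")
```

In this model, memory dominates the energy budget unless each fetched value is reused for many operations, which is why shortening the data path matters as much as speeding up the logic.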

Attempts at Conquering the Memory Wall

The memory wall refers to the growing disparity between processor performance and memory bandwidth, a gap that has widened significantly over the past 30 years. This imbalance reduces processor efficiency, increases power consumption, and limits scalability.

A commonly used solution, refined over time, involves buffering the memory channel near the processor by introducing a multi-level hierarchical cache. By caching frequently accessed data, the data path is significantly shortened, improving performance.

Moving down the memory hierarchy, the storage fabric transitions from individual-bit addressable registers to tightly coupled memory (TCM), scratchpad memory, and cache memory. While this progression increases storage capacity, it also reduces execution speed because more cycles are needed to move data in and out of memory.

The deeper the memory hierarchy, the greater the impact on latency, ultimately reducing processor efficiency.
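One common textbook way to quantify this effect is the average memory access time, where each level contributes its hit latency plus its miss rate times the cost of going one level deeper. This is a standard model brought in for illustration, not one taken from the article, and every latency and miss rate below is a made-up value.

```python
from typing import Sequence, Tuple


def average_access_time(levels: Sequence[Tuple[float, float]],
                        backing_latency: float) -> float:
    """Average memory access time, in cycles.

    `levels` lists (hit_latency, miss_rate) pairs from the fastest level
    outward. `backing_latency` is the latency of the final level (for
    example external memory), which is assumed to always hit.
    """
    time = backing_latency
    # Fold from the slowest level back toward the processor:
    # time_at_level = hit_latency + miss_rate * time_at_next_level
    for hit_latency, miss_rate in reversed(levels):
        time = hit_latency + miss_rate * time
    return time


if __name__ == "__main__":
    # Hypothetical three-level hierarchy in front of external memory.
    good_locality = [(1, 0.05), (10, 0.20), (40, 0.30)]
    poor_locality = [(1, 0.40), (10, 0.60), (40, 0.80)]
    external_memory_cycles = 300

    print("good locality:", average_access_time(good_locality, external_memory_cycles))
    print("poor locality:", average_access_time(poor_locality, external_memory_cycles))
```

With the same hierarchy, higher miss rates push more accesses down to the slower levels, so the average cost per access rises.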

The potential of AI, particularly generative AI and even more so agentic AI, is severely constrained by limited memory bandwidth. While GPUs are the preferred choice for AI training in data centers, their efficiency varies greatly depending on the algorithm. For example, efficiency reportedly drops to just 3–5% on GPT-4 MoE (Mixture of Experts) but can reach around 30% on Llama3-7B.
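Those efficiency figures translate directly into sustained throughput. The sketch below multiplies a peak rating by the reported efficiency. The 1 PetaFLOPS peak is a nominal assumption chosen for round numbers, not the rating of any specific device, and `effective_pflops` is a hypothetical helper.

```python
def effective_pflops(peak_pflops: float, efficiency: float) -> float:
    """Sustained throughput given a peak rating and a utilization fraction."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return peak_pflops * efficiency


if __name__ == "__main__":
    peak = 1.0  # assumed nominal peak in PetaFLOPS, for illustration only
    for label, eff in [("GPT-4 MoE, low estimate", 0.03),
                       ("GPT-4 MoE, high estimate", 0.05),
                       ("Llama3-7B", 0.30)]:
        print(f"{label}: {effective_pflops(peak, eff):.2f} PFLOPS sustained")
```

Seen this way, most of the hardware's rated capacity goes unused on the least efficient workloads, while its purchase and power costs are paid in full.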

Bridging the Gap: The Path Forward

In an ideal world, replacing TCM, scratchpad memory, and cache with registers would revolutionize performance. This approach would transform the hierarchical memory structure into a single, large, high-bandwidth memory directly accessible in one clock cycle.

Such an architecture would deliver high execution speed, low latency, and reduced power consumption while minimizing silicon area. Crucially, loading new data from external memory into the registers during processing would not disrupt system throughput.

This design has the potential to significantly improve processor efficiency, particularly for resource-intensive tasks. Current GPUs may struggle to keep up, potentially running out of capacity when processing LLMs that exceed one trillion parameters. By contrast, a register-based memory architecture of this kind would handle such high-demand workloads without the bottlenecks that can hinder traditional GPU setups. Such a breakthrough could redefine how complex computations are approached, enabling new possibilities in AI and beyond.

Balancing Computational Power and Economic Viability

To ensure scalable AI solutions, it is essential to strike a balance between raw computational power and cost considerations. This challenge is underscored by projections that LLM inference will dominate data center workloads by 2028. Analysts from Moody’s and BlackRock forecast that the rapid growth of generative AI and natural language processing will drive substantial upgrades in data center infrastructure.

This growth demands strategies to reduce reliance on expensive accelerators while simultaneously enhancing performance. Emerging technologies, such as application-specific integrated circuits (ASICs) and tensor processing units (TPUs), offer a promising path forward. These specialized architectures are designed to optimize inference workloads, prioritizing efficiency in latency, power consumption, and cost.

Rethinking Hardware and Software for AI

Addressing the unique demands of AI inference requires a paradigm shift in hardware and system design. By integrating innovative architectures and reimagining the supporting software ecosystem, datacenters can overcome the traditional tradeoffs between computational efficiency and economic feasibility.

As inference workloads increasingly shape AI’s future, overcoming challenges in latency and power consumption is critical. By focusing on cost-efficient and high-performance solutions, the industry can ensure the sustainable deployment of AI technologies. This will pave the way for a future where AI-driven insights are accessible and affordable on a global scale, enabling transformative real-world applications.

All images used courtesy of VSORA.