Source: EDN

My [LR’s] first exposure to hardware emulation happened circa 1995 upon visiting a major processor firm in Austin, Texas. Its lab was jam-packed from floor to ceiling with monstrous hardware emulators of different generations from Quickturn, the leader at the time.

What shocked me was the sight of a huge and messy bundle of cables that connected the design-under-test (DUT) – the processor design mapped inside the emulator – to a socket on a PC motherboard in place of the yet-to-be-taped-out processor. In the industry, this setup was called in-circuit emulation (ICE). Behind closed doors, the engineers called it spaghetti cables. It had a mean time between failures (MTBF) of a few hours. Signs all over the lab warned personnel against stepping on the cables.

Back then, ICE was the only deployment mode of an emulator, and the very reason for its existence. It allowed testing of the DUT with real-world traffic. The alternative was to exercise the DUT with test vectors generated by a software-based testbench executed on a gate-level or register transfer level (RTL) simulator.

Needless to say, testing the DUT in the context of a real-world testbed had an allure that no testbench could ever match. The advantage forced emulation users to endure pain, frustration, and discouragement, and caused design managers to blow through their tool budgets. Early emulators were non-shareable resources, only used on-site, with price tags that put them in the capital equipment purchase category.

Testbench simulation and ICE relied on two separate test environments that had nothing in common in those days of ASIC designs. Simulation was used from the early stages of the design cycle all the way to full ASIC-level verification. ICE was supposed to be the icing on the cake for final system-level validation. But often the icing was not ready before the cake was served: the DUT became ready for emulation only after first silicon samples came back from the foundry, defeating the purpose.

In the mid-1990s, the industry began a long journey to bridge the gap between ICE and simulation. Unknown back then, the solution would come in the form of ICE virtualization: the creation of a testbed functionally equivalent to ICE. It took many years of improvements in simulation, emulation, and testbench technology to reach a point where co-emulation (a.k.a., co-modeling or transaction-based acceleration) became the primary choice over ICE.

Ironically, spaghetti cables have been replaced by a soup of acronyms – a welcome tradeoff given the many advantages of virtualization. A short list includes:

- PLI: Programming language interface

- API: Application programming interface

- DPI: Direct programming interface

- SCE-MI: Standard Co-Emulation Modeling Interface

- BFM: Bus Functional Model

- TLM: Transaction-Level Modeling

- UVM: Universal Verification Methodology

- and many more.

But we’re getting ahead of ourselves.

Early co-simulation acceleration via the Verilog PLI

The first integration between an emulator and a simulator was devised in the mid-1990s. At the time, testbenches were written in the Verilog hardware description language (HDL), and the integration was based on the IEEE Verilog PLI standard. The PLI provided a mechanism for Verilog code to call functions written in the C programming language. The Verilog PLI standard suffered from several drawbacks. It was:

- rather awkward and difficult to use,

- signal-oriented, dragging down simulation execution, and – dramatically – emulation speed,

- not user friendly due to a callback-based response for value change detection.

All emulation vendors at the time offered a capability promoted as co-simulation or (cycle-based) simulation acceleration. The use of “acceleration” was a misnomer by a mile. Indeed, it was like driving a Ferrari pulling a big trailer filled with 10 tons of gravel. It hobbled the performance of the emulator, dropping it by three or four orders of magnitude. To be specific, in ICE mode, the emulator effectively ran at a few megahertz. In co-simulation, it achieved at most 1 kHz.

In a typical verification environment, the DUT I/O interface includes thousands of signals, with many switching states within each clock cycle. In simulation, the testbench and DUT communicate via a cycle-accurate, bit-level or signal-level interface, and each I/O signal transition is transferred between the two as it occurs.

In co-simulation, the testbench, processed by the simulator, and the DUT, now in the emulator, communicate via the same cycle-accurate, signal-level interface, and again, each I/O signal transition is exchanged between testbench and DUT as it occurs. This was certainly an advantage from an implementation point of view, as it required no modeling changes, but was a severe disadvantage for performance. In fact, even though the emulator could run orders of magnitude faster, it had to wait for these transfers to complete. Further, the emulator often was stalled by the testbench since it had to wait for the slow testbench to react to incoming signals and produce the next set of stimuli.
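The lock-step behavior described above can be illustrated with a small Python sketch. Everything in it is hypothetical: `EmulatedDut` is a stand-in accumulator, not a real emulator interface, and the transfer counter simply tallies the two pin-level exchanges that each DUT clock cycle requires in this simplified model.

```python
class EmulatedDut:
    """Hypothetical stand-in for a DUT in the emulator: an 8-bit accumulator."""

    def __init__(self):
        self.acc = 0

    def clock(self, data_in: int) -> int:
        """Advance exactly one clock cycle and return the output pins."""
        self.acc = (self.acc + data_in) & 0xFF
        return self.acc


def run_lockstep(stimulus):
    """Drive the DUT one cycle at a time, as signal-level co-simulation does."""
    dut = EmulatedDut()
    transfers = 0
    outputs = []
    for value in stimulus:
        transfers += 1             # testbench -> DUT: input pins for this cycle
        result = dut.clock(value)  # the emulator runs one cycle, then stalls
        transfers += 1             # DUT -> testbench: output pins for this cycle
        outputs.append(result)
    return outputs, transfers


outputs, transfers = run_lockstep([1, 2, 3, 250])
print(f"{len(outputs)} DUT cycles needed {transfers} transfers")
```

In this model the number of transfers grows linearly with the number of DUT cycles, so the emulator never runs for more than one cycle before waiting on the workstation again.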

The large communication overhead essentially killed overall performance. The actual verification performance was limited by the performance of the host PC, the size and complexity of the testbench, and/or the signal-level interface between the testbench and the DUT.

Today’s testbenches consume more than 50% of the CPU time in simulation – sometimes more than 90% – limiting co-simulation acceleration to less than a factor of two. It’s no surprise that co-simulation never took off, leaving the ICE mode in the position of prominence that it enjoyed from the beginning.
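The factor-of-two ceiling follows from an Amdahl's-law style argument. The sketch below assumes that the testbench share of run time is not accelerated at all, that the remainder is DUT evaluation sped up by the emulator, and that communication overhead is ignored. The function name and parameters are illustrative only.

```python
def max_acceleration(testbench_fraction: float,
                     dut_speedup: float = float("inf")) -> float:
    """Upper bound on overall speed-up when only the DUT portion is accelerated.

    testbench_fraction: share of simulation run time spent in the testbench.
    dut_speedup: how much faster the emulator evaluates the DUT (assumed).
    """
    if not 0.0 < testbench_fraction <= 1.0:
        raise ValueError("testbench_fraction must be in (0, 1]")
    dut_fraction = 1.0 - testbench_fraction
    return 1.0 / (testbench_fraction + dut_fraction / dut_speedup)


for share in (0.5, 0.9):
    print(f"testbench share {share:.0%}: at most {max_acceleration(share):.2f}x")
```

Even with an infinitely fast emulator, a testbench that takes half of the run time caps the gain at 2x, and one that takes 90% caps it at roughly 1.1x.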

Co-simulation & simulation acceleration with C/C++ testbenches

The EDA industry never sits idle. Time and again, new ideas and engineering feats enhance the design verification landscape. In fact, new verification languages were devised to create ever more advanced testbenches.

A case in point is the use of C/C++ to implement testbenches. The aim is to raise the abstraction level of the testbench and reduce the impact of the Verilog simulator – the slow link in the chain – on the testbench setup.

The replacement of Verilog with C/C++ testbenches dispensed with the PLI-based communication between testbench and DUT. Vendors resorted to using custom-made APIs based on macros to implement the pin-level or signal-level communication interface.

This testbench approach works to the advantage of an emulator hosting the DUT. Acceleration factors of up to two digits became possible.

Co-Emulation

Simulation acceleration with C/C++ testbenches at the signal level improved execution speed by close to two orders of magnitude versus PLI-based co-simulation. Still, the emulator – the strong link in the setup – was held back by the testbench – the weak link – and was prevented from using all its underlying processing power.

The breakthrough came by splitting the testbench itself into two parts. A front-end, written at a higher level of abstraction than RTL and executed on the workstation, would implement whatever verification capability was expected from the testbench. A back-end, written in RTL code and synthesized onto the emulator, would implement the testbench I/O protocols – namely, the state-machines that control the countless DUT I/O pin transitions – a compute-intensive task performed much more efficiently in hardware.

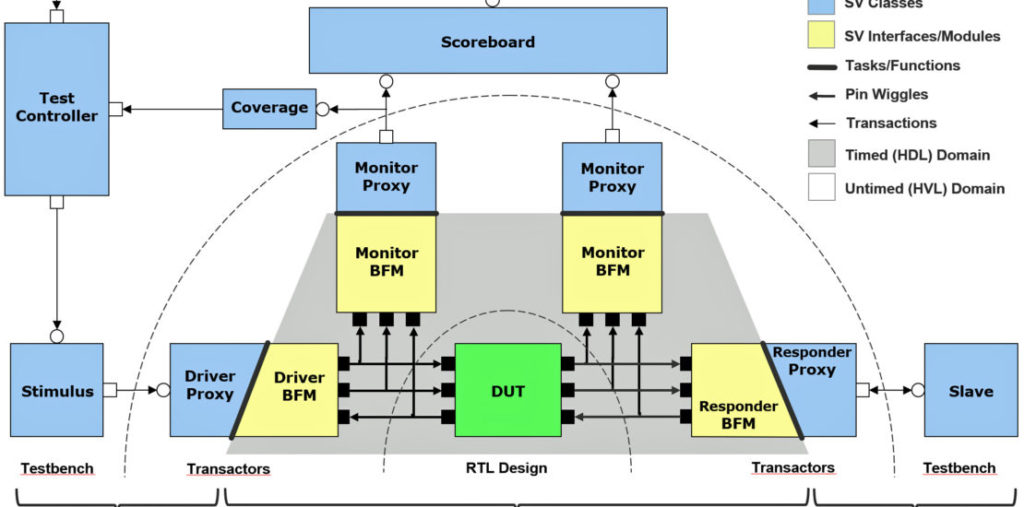

Furthermore, the communication between the front and back ends would consist of multi-cycle transactions instead of signal-level transitions. Today, this is known as a dual-domain environment with transaction-based inter-domain communication. A hardware-based domain, or HDL domain, runs in the emulator, and a software-based domain, or hardware verification language (HVL) domain, executes on the host computer.

Function call-based communication implements transactions, connecting the two parts, both inbound and outbound. The implementation can take several forms, but all should stem from an Accellera standard called SCE-MI, now at version 2.1. SCE-MI is a set of modeling APIs between behavioral models running on a workstation and synthesizable HDL models running on an emulator. The foundation of today’s standard is the SystemVerilog DPI (SV-DPI). The communication between emulator and workstation can be implemented using SCE-MI based DPI import and export functions and tasks, as well as SCE-MI pipe semantics.

The DPI is not afflicted by the drawbacks of the aforementioned PLI standard. Instead, it presents several advantages:

- Much simpler and more intuitive to use.

- API-less (i.e., a user-defined function on one side called from the other side).

- Transaction-oriented instead of signal-oriented, leading to much higher speed.
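To make the transaction-oriented split concrete, here is a minimal Python sketch of a transactor. It is not SCE-MI or DPI code: `PinLevelBus`, `WriteBfm`, and `WriteProxy` are hypothetical names, and the back-end, which would be synthesizable RTL in a real flow, is modeled in software purely to show that one function call across the domain boundary can expand into many pin-level cycles.

```python
from dataclasses import dataclass, field


@dataclass
class PinLevelBus:
    """Stand-in for the DUT-facing pins; records every per-cycle pin value."""
    trace: list = field(default_factory=list)

    def drive(self, valid: int, data: int) -> None:
        self.trace.append((valid, data))


class WriteBfm:
    """Back-end (HDL side): expands a transaction into bus cycles."""

    def __init__(self, bus: PinLevelBus):
        self.bus = bus

    def write_burst(self, payload: bytes) -> int:
        """One call is one transaction: one cycle per byte plus an idle cycle."""
        for byte in payload:
            self.bus.drive(valid=1, data=byte)
        self.bus.drive(valid=0, data=0)  # idle cycle closes the burst
        return len(payload) + 1          # bus cycles consumed


class WriteProxy:
    """Front-end (HVL side): untimed code that counts domain crossings."""

    def __init__(self, bfm: WriteBfm):
        self.bfm = bfm
        self.crossings = 0

    def send(self, payload: bytes) -> int:
        self.crossings += 1
        return self.bfm.write_burst(payload)


bus = PinLevelBus()
proxy = WriteProxy(WriteBfm(bus))
cycles = proxy.send(bytes(range(64)))
print(f"{proxy.crossings} crossing drove {cycles} bus cycles")
```

The testbench side sees a single function call, while all of the per-cycle pin activity stays on the back-end.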

The two domains require two sets of tools, are generally fed different files, and have different requirements. This scenario leads to increased performance, though the acceleration factor depends on the size and frequency of the transactions and function calls, among other factors.

The overall architecture fits well with an emulator, and is dominated by the emulator that now can run at speed. Appropriately, it is called co-emulation.

Three benefits were anticipated from the co-emulation approach. First, writing a testbench at a higher level of abstraction with fewer lines of code would be easier and less error-prone. Second, the workstation would process such lightweight behavioral code significantly faster. Third, the communication between the simulation front-end and the emulation back-end would move from cycle-based, pin-level synchronization to function-based, transaction-level synchronization, further reducing stalling of the emulator. The bigger the transaction, the fewer the synchronization “interruptions”, and the faster the overall setup executes.
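The effect of transaction size can be approximated with a simple amortization model. The numbers below are assumptions chosen for illustration: a 1 MHz emulator clock and a 1 ms cost per synchronization, picked so that one synchronization per cycle lands near the roughly 1 kHz quoted earlier for signal-level co-simulation. Real figures depend on the emulator, the host, and the link between them.

```python
def effective_hz(emulator_hz: float, sync_seconds: float,
                 cycles_per_transaction: int) -> float:
    """Average DUT clock rate when one synchronization is paid per transaction."""
    if cycles_per_transaction < 1:
        raise ValueError("a transaction must cover at least one cycle")
    run_time = cycles_per_transaction / emulator_hz
    return cycles_per_transaction / (run_time + sync_seconds)


EMULATOR_HZ = 1_000_000   # assumed raw emulator clock
SYNC_SECONDS = 1e-3       # assumed cost of one workstation round trip

for n in (1, 100, 10_000, 1_000_000):
    rate = effective_hz(EMULATOR_HZ, SYNC_SECONDS, n)
    print(f"{n:>9,} cycles/transaction -> {rate:>12,.0f} Hz")
```

Under these assumptions the effective rate climbs from about 1 kHz toward the raw emulator clock as each synchronization is amortized over more DUT cycles.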

Writing Co-Emulation Testbenches and Transactors

A review of a transactor’s characteristics and a highlight of what is required to implement a co-emulation testbench is in order here.

Table 1 compares the characteristics of the two sides.

Table 1 Characteristics of dual domain co-modeling

The transaction-based testbench (a.k.a. HVL) side is behavioral and untimed. It can be time-aware but should not have explicit time-advancement statements like clock or unit delays. Time advancement is executed on the HDL side, though the testbench can control timing indirectly via remote function and task calls. The testbench may be class-based, like a UVM testbench, but doesn’t need to be – well within a verification engineer’s comfort zone.
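The division of labor around time can be sketched as follows. This is a hedged Python model, not SystemVerilog: `HdlSide`, `wait_cycles`, and `send_packet` are hypothetical names standing in for remote tasks exported by the HDL domain. The test itself contains no delay statements, and it only learns the current time from the values those calls return.

```python
class HdlSide:
    """Stand-in for the HDL domain: the only place where the clock advances."""

    def __init__(self):
        self.cycle = 0

    def wait_cycles(self, count: int) -> int:
        """Models a remote task that consumes `count` clocks."""
        if count < 0:
            raise ValueError("count must be non-negative")
        self.cycle += count
        return self.cycle

    def send_packet(self, payload: bytes) -> int:
        """Models a BFM task that takes one clock per byte (assumed)."""
        self.cycle += len(payload)
        return self.cycle


def untimed_test(hdl: HdlSide) -> list:
    """HVL-side test: time-aware, but with no time advancement of its own."""
    log = [("start", hdl.cycle)]
    log.append(("first packet sent", hdl.send_packet(b"\x01\x02\x03\x04")))
    log.append(("gap elapsed", hdl.wait_cycles(10)))
    log.append(("second packet sent", hdl.send_packet(b"\xff\xff")))
    return log


for event, cycle in untimed_test(HdlSide()):
    print(f"cycle {cycle:>3}: {event}")
```

The test controls timing indirectly by choosing which tasks to call and with what arguments, while every clock edge is generated on the HDL side.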

The HDL side is synthesizable and must bear the limitations of modern synthesis technology: behavioral constructs are not generally supported, for example.

Mentor Graphics enhanced the capability to write BFMs by developing XRTL (for eXtended RTL), a superset of SystemVerilog RTL. It includes various behavioral constructs, such as implicit state machines, behavioral clock and reset generation, DPI functions, and tasks that can be synthesized onto an emulator. The HDL domain is statically elaborated, a familiar capability for most ASIC designers. Mentor calls this scenario TBX (TestBench Xpress), similar to an accelerated transactor, to enable emulation with modern testbenches.

Benefits of Transactors

Transactors allow the emulator to process data continuously with minimal stalling, dramatically increasing overall performance over PLI-based acceleration, and approaching the performance of ICE.

Co-emulation offers several advantages over ICE. It eliminates the need for speed-rate adapters and physical interfaces. With co-emulation, each physical interface is replaced with a virtual/logical transaction-level interface. Likewise, speed-rate adapters, required for ICE, are replaced with protocol-specific transactors.

Unlike speed-rate adapters, transactor models for the latest protocols are readily available off-the-shelf and easily upgraded to accommodate protocol revisions. Vendors and users provide libraries of transactors for standard interface protocols as well as tools to enable the development of custom, proprietary transactors.

It’s also possible to create an emulation-like environment by using transactors to connect the DUT to “virtual devices.” A virtual device is a software model of a peripheral device that runs on the workstation.

An additional merit of co-emulation is remote accessibility. As there are no physical interfaces connected to the emulator, a user can fully use and manage it from anywhere in the world.

Transaction-based acceleration led to speed-ups of three to four orders of magnitude over simulation. It finally gave design teams access to the full performance of the emulator without sacrificing much, if any, of the flexibility/visibility of simulation. Namely, it achieved the best of both worlds.

Co-Emulation with UVM

The dual-domain partitioning is required for co-emulation, but works perfectly well for simulation. The architecture is verification methodology-neutral. It readily fits a methodology like UVM since UVM has largely the same layering principles. The transactor layer is affected here, but the BFM proxies make this largely transparent to the UVM or modern testbench domain.
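The role of a BFM proxy can be illustrated with a short Python sketch. It is not UVM code, and all class names are hypothetical. Both back-ends are software stand-ins, so the example shows only the structural point: the test sequence talks to the proxy and stays unchanged when the platform underneath is swapped.

```python
class SimulationBfm:
    """Stand-in for a BFM running in an RTL simulator."""
    platform = "simulation"

    def __init__(self):
        self._regs = {}

    def write(self, addr: int, data: int) -> None:
        self._regs[addr] = data & 0xFFFFFFFF

    def read(self, addr: int) -> int:
        return self._regs.get(addr, 0)


class EmulationBfm:
    """Stand-in for a BFM synthesized onto an emulator; counts transactions."""
    platform = "emulation"

    def __init__(self):
        self._regs = {}
        self.transactions = 0

    def write(self, addr: int, data: int) -> None:
        self.transactions += 1
        self._regs[addr] = data & 0xFFFFFFFF

    def read(self, addr: int) -> int:
        self.transactions += 1
        return self._regs.get(addr, 0)


class BfmProxy:
    """What the testbench sees; forwards each call to whichever BFM is bound."""

    def __init__(self, backend):
        self._backend = backend

    def write(self, addr: int, data: int) -> None:
        self._backend.write(addr, data)

    def read(self, addr: int) -> int:
        return self._backend.read(addr)


def register_smoke_test(bfm: BfmProxy) -> bool:
    """Testbench-level sequence that knows nothing about the platform."""
    bfm.write(0x10, 0xCAFE)
    bfm.write(0x14, 0x1234)
    return bfm.read(0x10) == 0xCAFE and bfm.read(0x14) == 0x1234


for backend in (SimulationBfm(), EmulationBfm()):
    print(backend.platform, register_smoke_test(BfmProxy(backend)))
```

Keeping the platform choice behind the proxy is what lets the same test be reused across simulation and emulation in this sketch.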

In terms of verification productivity, the combination of UVM and co-emulation provides horizontal and vertical reuse benefits from UVM, and reuse across simulation, emulation, FPGA, and other platforms.

Conclusions

Platform-portable, emulation-compatible transactors offer a unique combination of performance, accessibility, flexibility, and scalability. Transactors support the development of a realistic system-level test environment for the DUT. They also enable rapid creation of a high-speed, system-level virtual platform by enveloping the emulated DUT with virtual components interacting with its multitude of interfaces.

The use of transactors delivers all the benefits of ICE without the challenges of rate-adapter availability and physical accessibility. No more “spaghetti cables!”

By adopting co-emulation for testbench acceleration, design teams can move their verification strategy up a level of abstraction, and achieve the verification performance and productivity necessary to fully debug and develop the most complex electronic hardware and software-based systems.