In an interview with Lauro Rizzatti, Luc Burgun, former CEO of EVE, explains how he crossed the bridge between hardware emulation and high-frequency trading.

Source: EETimes

It’s long been assumed that Electronic Design Automation (EDA) software and hardware could be applied to market segments other than semiconductor design. Former Emulation Verification Engineering (EVE) CEO Luc Burgun did just that. In an interview with verification expert Lauro Rizzatti (former general manager of EVE-USA), Luc explains how he crossed the bridge between hardware emulation and high-frequency trading (HFT) by riding the Field-Programmable Gate Array (FPGA) wave to NovaSparks.

Lauro: Four years have passed since you sold EVE to Synopsys. You started EVE in 2000 with the goal of building a successful business around a hardware emulator for pre-silicon testing of semiconductor designs. The challenge was to use commercial FPGAs. At that time, this field was dominated by two gorillas, Cadence and Mentor Graphics, both using custom silicon in their emulation platforms. The decision to use commercial FPGAs put EVE on a collision course with the two giants. In fact, in the early years, EVE was viewed as a doomed startup with no chance of success. You proved the skeptics wrong.

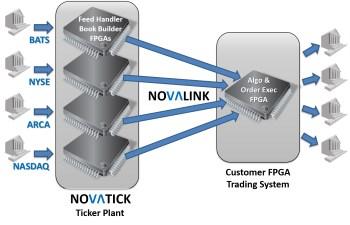

After selling EVE to Synopsys, you joined NovaSparks as CEO. NovaSparks is the only company offering 100% hardware-based appliances for high-frequency trading of stocks, bonds, commodities, futures, and options. All other approaches are either 100% software-based or hybrid solutions combining hardware with software.

So, can you please tell us what drove your decision to join NovaSparks?

Luc: In 2012, I was invited to join the board of NovaSparks by Nicolas Meunier, an investor I worked with at EVE. NovaSparks was developing appliances for high-frequency trading using FPGAs to accelerate the trading process, and I saw an opportunity to reuse my technical background (i.e., the use of FPGAs to accelerate semiconductor design verification) to address a completely new market. Obviously, I like challenges, and bridging the gap between the EDA world and the financial world was too good to pass up. A few months later, I had the opportunity to become CEO and, with excitement, I took the chance to drive the company to the next level.

Lauro: Please, explain how FPGAs can be used in the financial trading business.

Luc: Automation in the financial trading business started with NASDAQ in 1971. Over time, the use of computers spread across the entire trading cycle, leaving only the basic trading decisions to humans. Trading decisions include the type of transaction (sell/buy/cancel), the number of shares of a given stock, option, bond, or future, and the market on which to perform the transaction, such as NASDAQ or NYSE.

Since the end of the 1990s, trading decisions have increasingly been made by software running on computer farms (algorithmic trading) in colocation, i.e., in the data centers of the financial markets themselves, and more recently by hardware, such as FPGAs implementing the trading algorithms. This is where NovaSparks plays a role. We process and forward the market data to trading servers or trading FPGAs.

Lauro: What are the benefits of using a pure hardware-based solution to access the markets? What are the drawbacks of the alternatives?

Luc: It’s mainly a matter of performance. With a 100% hardware-based solution implemented via commercial FPGAs, we can offer significantly lower latency to access and decode the market data coming from the financial markets and to generate the order book updates. Latency is the time elapsed between the moment a data packet is distributed by the financial market and the moment it is received as an order book update by the trading server. Our latency is less than one microsecond, about five to 10 times faster than when the same functions are performed in software.
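To make “decode the market data and generate the order book updates” concrete, here is a minimal software sketch of that function in Python. The 10-byte message layout, the `OrderBook` class, and its methods are hypothetical: they do not correspond to any exchange's actual format or to NovaSparks' implementation. The appliance performs this work in FPGA logic; the sketch only illustrates what is computed, not how fast.

```python
import struct
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical wire layout (not a real exchange format), big-endian, 10 bytes:
# action (1 byte: b"A" add, b"D" delete), side (1 byte: b"B" bid, b"S" ask),
# price in ticks (uint32), quantity (uint32).
MSG = struct.Struct(">ccII")


@dataclass
class OrderBook:
    """Price-level book for one instrument: price (ticks) -> aggregated quantity."""
    bids: Dict[int, int] = field(default_factory=dict)
    asks: Dict[int, int] = field(default_factory=dict)

    def apply(self, payload: bytes) -> None:
        """Decode one message and apply it to the matching side of the book."""
        action, side, price, qty = MSG.unpack(payload)
        if side not in (b"B", b"S"):
            raise ValueError(f"unknown side {side!r}")
        levels = self.bids if side == b"B" else self.asks
        if action == b"A":
            levels[price] = levels.get(price, 0) + qty
        elif action == b"D":
            remaining = levels.get(price, 0) - qty
            if remaining > 0:
                levels[price] = remaining
            else:
                levels.pop(price, None)  # level fully consumed: remove it
        else:
            raise ValueError(f"unknown action {action!r}")

    def best_bid(self) -> Optional[int]:
        return max(self.bids) if self.bids else None

    def best_ask(self) -> Optional[int]:
        return min(self.asks) if self.asks else None


if __name__ == "__main__":
    book = OrderBook()
    for msg in [(b"A", b"B", 10000, 300), (b"A", b"S", 10005, 200), (b"A", b"B", 10001, 100)]:
        book.apply(MSG.pack(*msg))
    print(book.best_bid(), book.best_ask())  # 10001 10005
    book.apply(MSG.pack(b"D", b"B", 10001, 100))
    print(book.best_bid())  # 10000
```

Each message adjusts the aggregated quantity at one price level, and the best bid and ask are read straight off the book. A production feed handler would typically also track sequence numbers, individual orders, and many instruments at once.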

This is a big deal when you trade. You want to be the first to analyze the market data and place your buy/sell orders and/or cancellations. But FPGAs offer additional benefits. They are more reliable: once configured, like any function implemented in hardware, they are less prone to bugs because they provide a more stable environment. Just keep in mind that application software can malfunction if you upgrade the underlying operating system or, more generally, if you change anything in the software environment of your server.

Lauro: That being the case, why are trading firms or banks still using software-based solutions to access the markets?

Luc: Most financial markets became automated in the 1990s. At that time, a software implementation was the only practical way to access and decode the financial market data and build the order book. FPGAs had already been around for about a decade, but they did not offer enough capacity to implement a trading task in a single device. At the beginning of the new millennium, new generations of FPGAs with enough capacity started to appear. However, programming such FPGAs required considerable time and design talent.

Today, there are more than 200 different financial markets around the world and, unfortunately, market data is not normalized across them. Few markets share a format; most use their own. To serve such a large and fragmented financial landscape, you end up developing a lot of different FPGA firmware, or programming code. The scenario is further compounded by frequent changes to market formats. All of this dramatically increases the pressure on trading infrastructure providers to ensure that clients can adopt new formats without taking any risk.

In the end, it’s a matter of time-to-market. The major benefit of a software-based trading process is the flexibility to incorporate changes, such as supporting a new market or updating to a new market data format. It takes less time to implement a change in a software algorithm than to reprogram an FPGA. A market data format change in software can be realized in a few days. In an FPGA, the change involves rewriting the FPGA programming code, recompiling the design, and testing it on the FPGA. The whole process may take a few weeks.

Despite the advantages discussed earlier, this works against adopting FPGAs. At NovaSparks, we invested time and resources to put in place a fast development methodology. As a result, we reduced our time-to-market to something comparable to software solutions.

This process also requires a different skill set than that of a software designer. We employ hardware designers with experience in firmware development for reprogrammable hardware. Few designers fit the profile, making it harder to find the right engineers. By the way, the few potential candidates would never believe there are great opportunities in the finance industry!

Lauro: You are using commercial FPGAs, like you did at EVE. Can you elaborate a bit on this choice?

Luc: If the question is why we chose commercial FPGAs instead of developing a custom hardware solution, let me say that designing dedicated hardware for this application would be a bad economic decision. Volumes are too small to justify an investment of several hundred million dollars, which is the development cost that Xilinx and Altera (now Intel) sustain when creating new FPGA generations. And even then, a custom solution would not keep up with the latest process technologies. Only commercial FPGAs can leverage the latest process nodes: Xilinx UltraScale+ and Altera Stratix 10, built on 16nm and 14nm processes respectively, offer the performance and capacity we need.

More to the point, once your design is cast in silicon, the cost of implementing the changes I discussed above would be astronomical, in fact prohibitive.

To explain our decision, a bit of history is important. At EVE we used Xilinx FPGAs, since the main drivers for a hardware emulator were capacity and compile time. The bigger the FPGAs, the larger the emulator capacity for the same number of devices. Also, provisioning more capacity than we strictly needed allowed us to reduce the density, or fill rate, of each FPGA, which dramatically improved design compile time. A customer could typically emulate an IC design on 100 FPGAs and run, compile, and debug it in a relatively short amount of time: minutes or hours, not days.

At NovaSparks, the driver is I/O performance, i.e., the latency and bandwidth of the Ethernet, PCIe, and memory interfaces. Xilinx has been improving I/O performance in its UltraScale generation, while Altera seems to focus more on capacity in its next generation. That said, it’s critical for us not to depend on a single FPGA provider, so our RTL code and our architecture have been designed to be portable.

Lauro: Do you see any difference in terms of development methodology between emulation and high-speed trading?

Luc: First of all, the quality of what we develop is critical, as we are part of the production environment. Our customers must get the right market data so that they can place their orders on the markets safely. This forces us to focus on testing what we deliver. In the end, we ship a hardware appliance with some firmware and software, and the customer interacts with it through APIs to configure and monitor the appliance and to get the market data.

On a side note, we use the Very High Speed Integrated Circuit Hardware Description Language (VHDL) for FPGA programming, together with some VHDL generators, because this is essential to reach the level of quality and productivity we need.

Second, the development methodology is different from what we used to do at EVE. On the one hand, it is less of a collective effort, since a feed handler (the function performed by the appliance to connect the trading companies to the markets) for a specific market can be developed by a single engineer. On the other hand, since we must ship a simultaneous release for all 40 markets we serve at any given time, we need to make sure everything is perfectly aligned. This is where the challenge lies.

Lauro: What do you expect in the near future?

Luc: Let me make an observation. The high-frequency trading market has different dynamics than the EDA market. Trading companies, such as banks, hedge funds, or proprietary trading firms, are more conservative, stretching the sales cycle well beyond that of the semiconductor industry. Also, we are part of our customers’ production environment, whereas emulation is one of several tools that electronics developers use for design verification prior to silicon. In the financial world, you really need to convince your customers that you are a serious long-term alternative to software solutions. The good news is that our customers compete with one another, so offering the lowest latency is paramount.

Looking ahead, we conservatively expect steady growth in the 25 to 30% range. We see some interesting upside from expanding our market coverage to new geographies (mainly Asia) and from addressing new asset classes such as equity options and bonds. Equity options are really challenging for software solutions because the number of instruments can be two orders of magnitude larger than in equity markets. Only very large FPGAs like the Xilinx UltraScale+ or Altera Stratix 10 would allow us to play in the equity options field. But the payoff can be huge.

Lauro: In closing, is there anything else you wish to share with us?

Luc: It’s good to be exposed to other industries. A year ago, I joined the board of a startup using magnetic cooling in refrigeration systems, which eliminates compressors and polluting gases. Refrigerators and air conditioners based on magnetic cooling also consume 50% less energy than compressor-based ones. Magnetic cooling is a green dream for any environmentalist.

EDA is great, but life is short and there is no reason to devote your whole life to the same industry! Pareto might be right to assert that “You make more money if you always work for the same industry. Simply because you monetize better your expertise,” but I value what I learn more than what I earn.

Dr. Lauro Rizzatti is a verification consultant and industry expert on hardware emulation (www.rizzatti.com). Previously, Dr. Rizzatti held positions in management, product marketing, technical marketing, and engineering. He can be reached at lauro@rizzatti.com.