Source: EDACafé

Allowing for the differences in subject matter (classic opera versus chip design verification) and in reputation (a trivial farce versus an expensive, hard-to-use tool, admittedly a judgement call), I see a similarity with what is happening in hardware emulation.

First devised in the mid-1980s and driven by progress in field-programmable gate-array (FPGA) technology, hardware emulation had a very difficult time gaining acceptance, and for good reasons. Not only was it very expensive to purchase, it was also atrociously unfriendly to deploy. Only pioneering engineering teams dealing with very large designs, which back then meant processors and graphics chips, had the stomach (and the deep pockets) to adopt the technology.

Fast forward to the second decade of the new millennium, and hardware emulation has been adopted by engineering teams across the entire spectrum of chip design: processors and graphics, multimedia, wireless/mobile, networking, storage, aerospace, automotive, medical and, of course, IoT.

The growing appreciation of Mozart’s opera over time was due entirely to better musical education of the public, that is, to a change in public taste. The popularity of hardware emulation, by contrast, is owed to radical improvements in the product and to dramatic changes in the semiconductor design landscape.

A few characteristics of today’s hardware emulators help put the above in proper perspective.

In the early days, setting up an emulator took several months, often pushing time-to-emulation well past the availability of silicon and defeating its purpose. Today, setup takes a couple of weeks at most, and often less than one week.

Over the same time span, the cost of emulation has dropped by two orders of magnitude, from one dollar per gate to one penny per gate. More to the point, emulation is the least expensive verification engine when measured in dollars per verification cycle.

Usage has expanded from a single deployment mode, in-circuit emulation (ICE), to several modes covering a range of verification objectives. ICE is now flanked by four acceleration modes: transaction-based acceleration, cycle-based acceleration, synthesizable testbench acceleration and embedded software acceleration. A mix of these modes can be used for hardware debugging, hardware/software integration, embedded software validation, system validation, low-power verification, power estimation and performance characterization, with more to come.

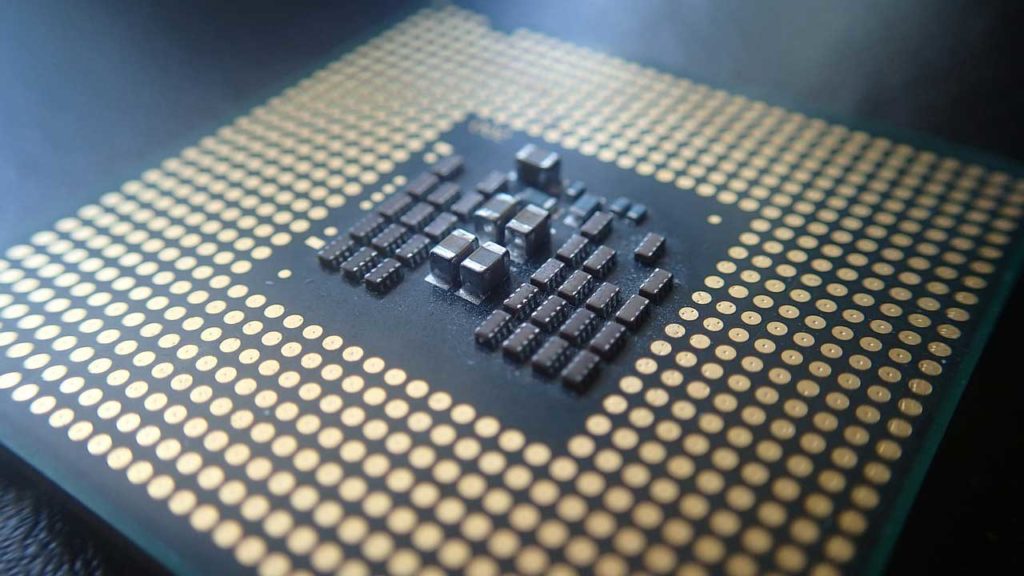

Turning to the design landscape, modern designs routinely exceed 100 million gates, and the largest are well over one billion gates.

This level of complexity stems from the fabric of modern designs, which includes multi-core CPUs, GPUs, networks-on-chip (NoCs), custom logic and a plethora of peripheral interfaces. Complexity is pushed further upward by the vast amount of embedded software, from drivers to operating systems, diagnostics and apps. Consider just one data point: today, for every hardware designer there are five or more software developers.

It is no surprise that hardware emulation has become a crucial verification tool. At fewer than 10 cycles per second, the beloved HDL simulator would take years to exhaustively verify a modern SoC design. An emulator can do the job in less than one week when dedicated 24/7 to a single design.

What’s more, unlike their great-grandparents, today’s emulators can be accessed remotely and serve multiple concurrent users. Not only do they cost less than in the early days, but multiple use modes, multiple users and remote access combine to dramatically increase their return on investment.

Così fan tutte, loosely translated as “Thus Do They All,” premiered in Vienna in 1790. Although hardware emulation did not take nearly as long to become popular, to paraphrase Peter G. Davis: “Hardware emulation was virtually unknown three decades ago, considered expensive and unfriendly to use. Now it is well regarded as a critical and mandatory chip design verification apparatus in the toolbox of any design engineering team.” Thus do they all use hardware emulation for today’s complex SoC designs.