Source: Electronic Design

Misconceptions and outright falsehoods can skew the perception of hardware emulation. These include:

1. Hardware emulators are verification tools relegated to the backroom and used only on the most complex designs that need more rigorous debugging.

This was true from the emulators’ early days in the late 1980s and early 1990s until roughly the turn of the millennium. For the past 15 years or so, it has not been the case. Several technical enhancements and innovations made this shift possible. New architectures, new capabilities, and simplified usage eased and propelled their deployment in all segments of the semiconductor industry, from processors and graphics to networking, multimedia, storage, automotive, and aerospace.

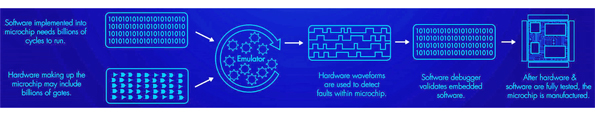

Today, emulators are used for designs of any size and any type. They can verify the hardware, integrate hardware with embedded software, and validate both the embedded software and the entire system-on-chip (SoC) design.

2. Project teams eschew hardware emulation because of its “time-to-emulation” reputation. Setting up the design and tweaking the knobs on an emulator to get it ready is too much of an effort for the results.

Again, this was true for many years, but not any longer. Progress in compilation technology has simplified and improved the mapping of a design under test (DUT) onto the emulator. The time needed to prepare the DUT, compile it, and set up the emulator has dropped from several months to a few days, or even to a single day when designs are less complex or are derivatives of a new, complex design.

3. Hardware emulators are very expensive to acquire and to maintain.

It was true in the old days, but not so today. The acquisition cost of a modern emulator pales against the verification power and flexibility of the tool. A hardware emulator is the most versatile verification engine ever developed. It has the performance and capacity necessary to tackle even the most complicated debugging scenarios, which often include embedded software content. Consider that the unit cost has fallen from five dollars per gate in the early 1990s to around a couple of cents per gate or less today.

As strange as it may sound, the tool’s versatility makes hardware emulation the cheapest verification solution when measured on a per-cycle basis.

The total cost of ownership also has dropped significantly. Gone are the days when, figuratively, the emulator was delivered with a team of application engineers in the box to operate and maintain it. The reliability of the product has improved dramatically, reducing the cost of maintenance by orders of magnitude. In addition, improved ease of use has reduced the effort needed to operate it.

4. Hardware emulation is used exclusively in in-circuit emulation (ICE) mode.

For the record, in ICE mode, the DUT mapped inside the emulator is driven by the target system, where the taped-out chip would eventually reside. This was the deployment mode that drove emulation’s conception and development: namely, to test the DUT with real-world traffic generated by the physical target system.

While this mode is still employed by many users, it’s not the only way to deploy an emulator. In addition to ICE, emulators can be used in a variety of acceleration modes. They can be driven by software-based testbenches via a PLI interface, an approach that is not popular because of its limited acceleration but is still usable to shorten design bring-up when switching from simulation to emulation. They can be driven via a transaction-based interface (TBX or TBA), whose popularity is growing because its acceleration factor is in the same ballpark as that of ICE, at least for some of the current crop of emulators. They can be used in standalone mode (SAA) by mapping a synthesizable testbench inside the emulator together with the DUT. They can accelerate the validation of embedded software stored in on-board or tightly connected memories. And they can be used in combinations of the above.

5. Hardware emulation is useless in transaction-based acceleration mode.

A still widespread misconception is that the transaction-based approach does not work or, in the best case, is limited in performance when compared with ICE. The concept was devised by IKOS Systems in the late 1990s and it worked. After Mentor Graphics acquired IKOS, it improved upon and pushed the technology under the name of TBX as a viable alternative to ICE.

The emulation startup EVE (Emulation Verification Engineering), where I was general manager and vice president of marketing, adopted it as the main deployment mode from its founding. Both Mentor and EVE proved that the transaction-based acceleration mode not only works, but can perform as fast as, and possibly faster than, ICE.

Another, unique benefit of transaction-based acceleration is the ability to create a virtual test environment that exercises the DUT and supports corner-case analysis, what-if analysis, and more, none of which is possible in ICE. An example is the VirtuaLAB implementation by Mentor Graphics. VirtuaLAB models an entire target system, such as USB, Ethernet, or HDMI, in a virtual environment.

6. Hardware emulators are replacing HDL simulators.

Not only is this false, but it will never happen. The fast setup and compilation of hardware-description-language (HDL) simulators, plus their inherent advantages in flexible and thorough design debugging, make them the best verification tool in the industry, with one significant caveat: Their performance degrades as design sizes increase, to the point where they run out of steam at hundreds of millions of gates. Here is where the emulator comes to the rescue and takes over.

Given that design sizes will continue to grow and no enhancement in the HDL simulator’s speed of execution can be expected on large designs, emulators will become the only possible vehicle for design verification at the system level. Simulators will continue to be used at the intellectual-property (IP) and block level.

You may ask, what about simulation farms?

In simulation farms, a large DUT is not broken down into pieces and distributed over a large number of PCs, where each workstation executes a piece of the DUT. This approach has been tried over and over for more than 25 years without an appreciable degree of success.

Instead, each workstation in the farm executes a copy of the same design, as large as it may be. Each copy of the design is exercised by a different, self-contained and independent testbench. Hence, the design size still rules the day. Simulation farms are popular for regression testing with very large sets of testbenches.
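The farm model just described can be sketched in a few lines of Python. This is an illustrative model only: the function name `farm_wall_time`, the cycle counts, and the simulator speed are hypothetical values, not measurements. It shows that adding workstations shortens a regression run only until every testbench has its own machine; beyond that point, the longest single test, which is bound by how fast one simulator can run the full design, sets the floor.

```python
import heapq

def farm_wall_time(test_cycles, sim_speed_hz, workers):
    """Estimate wall-clock seconds to run independent testbenches on a farm.

    Each worker simulates a full copy of the design, so every test runs at
    the same single-simulator speed (sim_speed_hz, in design cycles/second).
    Tests are assigned greedily, longest first, to the least-loaded worker.
    """
    if workers < 1 or sim_speed_hz <= 0:
        raise ValueError("need at least one worker and a positive speed")
    loads = [0.0] * workers          # busy time per worker, kept as a min-heap
    heapq.heapify(loads)
    for cycles in sorted(test_cycles, reverse=True):
        least_busy = heapq.heappop(loads)
        heapq.heappush(loads, least_busy + cycles / sim_speed_hz)
    return max(loads)

# Hypothetical regression: 8 tests of 1M cycles each, simulator at 100 cycles/s
tests = [1_000_000] * 8
for n in (1, 4, 8, 16):
    print(n, "workers:", farm_wall_time(tests, 100.0, n), "s")
```

In this model, going from 8 to 16 workers changes nothing, because no test can finish faster than one simulator can execute it.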

Today, the usage ratio of simulators to emulators may be 80% versus 20%. My prediction is that in 10 years, the ratio will swap, with 20% of usage for simulators and 80% for emulators.

7. The only difference between hardware emulation and FPGA prototyping is in the name. FPGA prototyping can and will replace hardware emulators.

This is a false statement. While emulators may use and, indeed, some do use FPGA devices, the differences between the two tools are staggering.

FPGA prototypes are designed and built to achieve the highest speed of execution possible. When built in-house, each prototype often is optimized for speed targeting one specific design. They trade off DUT mapping efforts, DUT debugging capabilities (limiting them to a bare minimum that’s often useless), and deployment flexibility and versatility. They’re used for embedded software validation ahead of silicon availability and for final system validation.

Regardless of the technology they implement (custom processor-based, custom emulator-on-chip-based, or commercial FPGA-based), emulators share several characteristics that set them apart from an FPGA prototype board or system. For example:

• The time spent in DUT mapping and compiling is worlds apart from the time spent on the same task in an FPGA prototyping system. It is days versus months.

• Emulators target hardware debugging and, therefore, support 100% visibility into the design without requiring compilation of probes. Differences exist between emulators in this critical capability, but they are minor compared with the gap between any emulator and an FPGA prototyping system.

• Emulators can be used in several modes of operation and support a spectrum of verification objectives, from hardware verification and hardware/software integration to firmware/operating-system testing and system validation. They can be used for multi-power domain design verification and can generate switching activity for power estimation.

• Emulators are also multi-user/job engines. FPGA prototyping systems are used solely by one user processing one job at a time.

All of the above explains why FPGA prototypes will never replace emulators.

8. Hardware emulators must be installed at one site location and cannot be used remotely, for instance, as a datacenter resource.

This was true with the early emulators, but not anymore. All current emulators can be accessed remotely. However, in ICE mode, the approach is cumbersome, since it requires on-site staff to install and switch speed-rate adapters whenever a different design is loaded into the emulator. In fact, supporting multiple users or multiple jobs in ICE mode makes remote access awkward.

ICE mode notwithstanding, multi-user, remote access (in TBX/TBA or SAA) and large configurations are at the foundation of emulation datacenters. Obviously, management software is required for efficient and smooth operations, and here is where the difference lies between various emulator implementations.

9. Verifying embedded software in an SoC is not supported by emulation, which means hardware/software co-verification is impossible with the tool.

The truth is the opposite. Emulation is the only tool that can perform the daunting task.

To verify the interaction of embedded software, including firmware and operating systems, on the underlying hardware (SoC with single or multiple CPUs), a verification engineer needs three main ingredients:

• A cycle-accurate representation of the design to be able to trace a bug anywhere in the SoC. Emulators offer the most accurate design representation, short of real silicon.

• Very high speed of execution, in the range of several hundred kilohertz to megahertz; the faster, the better. Emulators achieve those speeds.

• Full visibility into the hardware design. Emulators provide 100% visibility into the design, although there are differences in the access speed among the emulators.
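To see why execution speed matters for embedded software, it helps to convert clock rate into wall-clock time. The sketch below is simple arithmetic; the workload of one billion cycles is a hypothetical figure chosen for illustration, and the speeds are sample points from the kilohertz-to-megahertz range mentioned in the list.

```python
def run_time_seconds(design_cycles, clock_hz):
    """Wall-clock seconds needed to execute design_cycles at clock_hz."""
    if clock_hz <= 0:
        raise ValueError("clock_hz must be positive")
    return design_cycles / clock_hz

WORKLOAD_CYCLES = 1_000_000_000   # hypothetical software workload

for label, hz in [("200 kHz", 200_000), ("500 kHz", 500_000), ("1 MHz", 1_000_000)]:
    minutes = run_time_seconds(WORKLOAD_CYCLES, hz) / 60
    print(f"{label}: {minutes:.1f} minutes")
```

Because run time scales inversely with clock rate, every doubling of emulation speed halves the wait for the same software workload.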

10. Power estimation is a critical verification task, but hardware emulation doesn’t have the capabilities to analyze the power consumed by an SoC.

Another false statement. Power-consumption analysis is based on tracking the switching activity of all elements inside the design. The more granular the design representation, the more accurate the analysis. Unfortunately, the higher granularity hinders the designer’s flexibility to make significant design changes to improve the energy consumption. This can best be achieved at the architectural level.

Power-consumption analysis at the register transfer level (RTL) and gate level of modern SoC designs can be best accomplished with emulation. Only emulation has the raw power to process vast amounts of logic and generate the switching activity of all its elements.
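As a rough illustration of how switching activity feeds a power estimate, the sketch below counts toggles per net and applies the textbook approximation of 0.5 · C · Vdd² of energy per toggle. It is a minimal sketch, not any vendor's flow: the net names, capacitances, supply voltage, and clock rate are made-up values, and real tools draw capacitance from library and layout data.

```python
def count_toggles(samples):
    """Count value changes in a per-cycle list of 0/1 samples for one net."""
    return sum(1 for prev, cur in zip(samples, samples[1:]) if prev != cur)

def dynamic_power_watts(trace, cap_farads, vdd_volts, clock_hz):
    """Estimate average dynamic power from per-net switching activity.

    trace: dict mapping net name -> list of 0/1 samples, one per clock cycle.
    cap_farads: dict mapping net name -> switched capacitance (assumed known).
    Uses the textbook estimate of 0.5 * C * Vdd^2 energy per toggle.
    """
    cycles = len(next(iter(trace.values()))) - 1   # number of sampled intervals
    if cycles < 1:
        raise ValueError("need at least two samples per net")
    duration = cycles / clock_hz
    energy = sum(
        0.5 * cap_farads[net] * vdd_volts ** 2 * count_toggles(samples)
        for net, samples in trace.items()
    )
    return energy / duration

# Hypothetical two-net trace; capacitances and voltage are made-up values.
trace = {"clk_en": [0, 1, 0, 1, 0], "data0": [0, 0, 1, 1, 1]}
caps = {"clk_en": 2e-15, "data0": 5e-15}
print(dynamic_power_watts(trace, caps, vdd_volts=1.0, clock_hz=1e6))
```

The cost of this calculation grows with the number of nets and the number of cycles traced, which is why generating the activity data for a full SoC calls for an engine with emulation-class throughput.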

11. All hardware emulators are created equal.

Today, all hardware emulators share many characteristics, and they all perform their jobs. However, some are better in certain modes than others.

From an architectural perspective, differences exist in their technological foundation. The three commercial offerings from the three main EDA suppliers include:

• Custom processor-based architecture: Devised by IBM, it has been a proven technology since 1997 and dominated the field in the decade from 2000 to 2010. Advantages include fast compilation, good scalability, fast speed of execution in ICE mode, support from a comprehensive catalog of speed bridges, and excellent debugging. Drawbacks are limited speed of execution in TBA mode, large power consumption, and larger physical dimensions than an emulator based on commercial FPGAs of equivalent design capacity.

• Custom emulator-on-chip architecture: Pioneered by a French startup by the name of Meta Systems in the mid-1990s, the emulator-on-chip architecture is based on a highly optimized custom FPGA that includes an interconnect network for fast compilation, which also enables correct-by-construction compilation. Design visibility is implemented in the silicon fabric, which assures 100% access without probe compilation as well as rapid waveform tracing. It has a few drawbacks; namely, it requires a farm of workstations for fast compilation and has somewhat slower speed and larger physical dimensions than an emulator based on commercial FPGAs of equivalent design capacity.

• Commercial FPGA-based architecture: First used in the 1990s, it lost appeal vis-à-vis the custom processor-based architecture because of several shortcomings. In the past 10 years or so, the new generations of very large commercial FPGAs have helped to overcome many of the original weaknesses. Its physical dimensions and power consumption are the smallest and lowest for equivalent design capacity. It also achieves faster execution speed when compared to the other two architectures. Among the drawbacks, its compilation is slower than that of the other two architectures, at least on designs of 10 million gates or less. Full design visibility is achieved by trading off some emulation speed.

All three offer scalability to accommodate any design size, from IP blocks all the way to full systems of over a billion gates. They support multiple users, though the custom processor-based architecture can accommodate the highest number of users. They also support all deployment modes and verification objectives.